Add AI-powered generation capabilities to your CE.SDK application for generating images, videos, audio, and text using the @imgly/plugin-ai-apps-web package.

This tutorial walks you through integrating AI-powered generation into your CreativeEditor SDK application with the @imgly/plugin-ai-apps-web package, including how to set up providers for images, videos, audio, and text.

This guide covers installing AI generation packages, initializing CE.SDK with video mode, configuring the AI dock and canvas menu, setting up text, image, video, and audio providers, implementing middleware for custom processing, controlling features with the Feature API, and setting up proxy servers for secure API communication.

Prerequisites#

- Basic knowledge of JavaScript/TypeScript and React

- Familiarity with CreativeEditor SDK

- API keys for AI services (Anthropic, fal.ai, ElevenLabs, etc.)

1. Project Setup#

First, set up your project and install the necessary packages:

```bash
# Initialize a new project or use an existing one
npm install @cesdk/cesdk-js
npm install @imgly/plugin-ai-apps-web

# Install individual AI generation packages as needed
npm install @imgly/plugin-ai-image-generation-web
npm install @imgly/plugin-ai-video-generation-web
npm install @imgly/plugin-ai-audio-generation-web
npm install @imgly/plugin-ai-text-generation-web
```

Import the providers from their respective packages:

```typescript
// Import the AI Apps plugin (used in step 4)
import AiApps from '@imgly/plugin-ai-apps-web';

// Import providers from individual AI generation packages
import Elevenlabs from '@imgly/plugin-ai-audio-generation-web/elevenlabs';
import FalAiImage from '@imgly/plugin-ai-image-generation-web/fal-ai';
import OpenAiImage from '@imgly/plugin-ai-image-generation-web/open-ai';
import Anthropic from '@imgly/plugin-ai-text-generation-web/anthropic';
import FalAiVideo from '@imgly/plugin-ai-video-generation-web/fal-ai';

// Import middleware utilities
import { uploadMiddleware } from '@imgly/plugin-ai-generation-web';
```

2. Initialize CE.SDK#

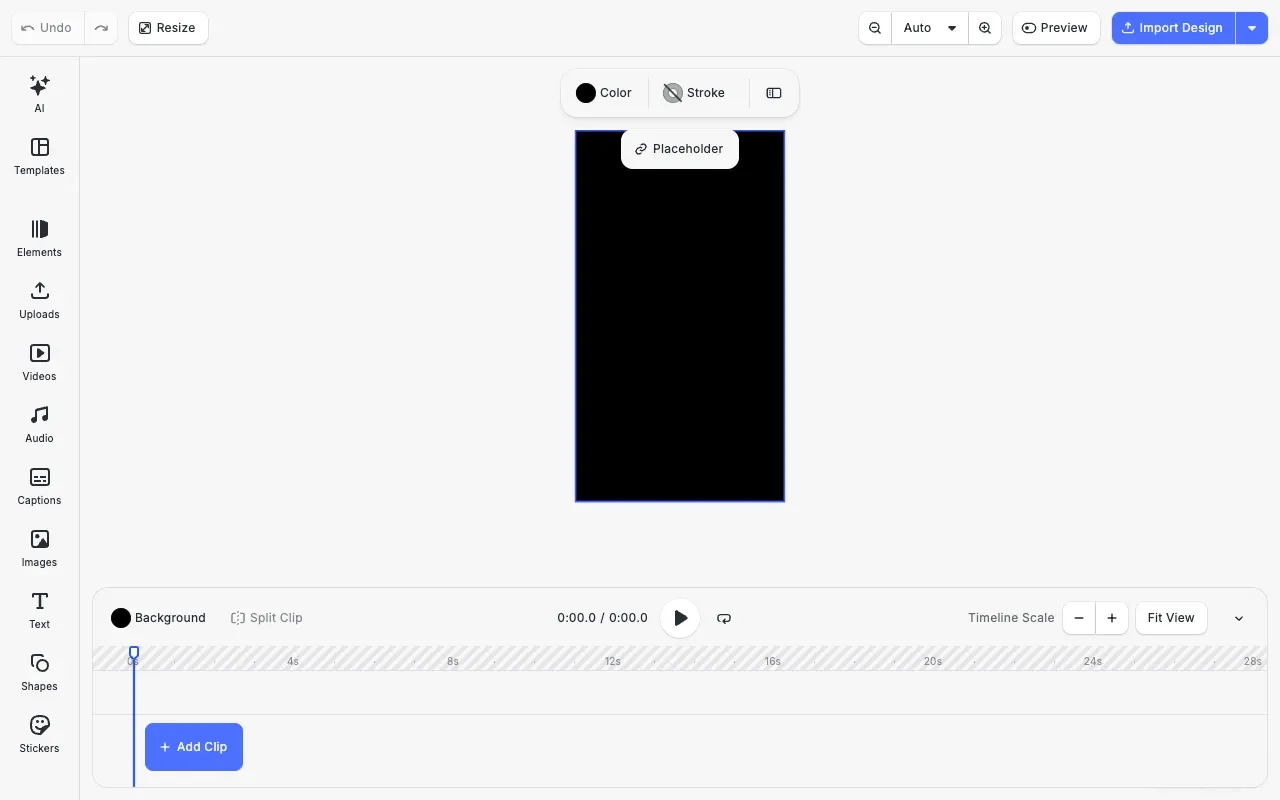

Initialize CE.SDK with Video mode to utilize all AI capabilities:

```typescript
// Assumes `cesdk` is an already initialized CreativeEditorSDK instance
await cesdk.addPlugin(new VideoEditorConfig());

// Add asset source plugins
await cesdk.addPlugin(new BlurAssetSource());
await cesdk.addPlugin(new CaptionPresetsAssetSource());
await cesdk.addPlugin(new ColorPaletteAssetSource());
await cesdk.addPlugin(new CropPresetsAssetSource());
await cesdk.addPlugin(
  new UploadAssetSources({
    include: [
      'ly.img.image.upload',
      'ly.img.video.upload',
      'ly.img.audio.upload'
    ]
  })
);
await cesdk.addPlugin(
  new DemoAssetSources({
    include: [
      'ly.img.templates.video.*',
      'ly.img.image.*',
      'ly.img.audio.*',
      'ly.img.video.*'
    ]
  })
);
await cesdk.addPlugin(new EffectsAssetSource());
await cesdk.addPlugin(new FiltersAssetSource());
await cesdk.addPlugin(
  new PagePresetsAssetSource({
    include: [
      'ly.img.page.presets.instagram.*',
      'ly.img.page.presets.facebook.*',
      'ly.img.page.presets.x.*',
      'ly.img.page.presets.linkedin.*',
      'ly.img.page.presets.pinterest.*',
      'ly.img.page.presets.tiktok.*',
      'ly.img.page.presets.youtube.*',
      'ly.img.page.presets.video.*'
    ]
  })
);
await cesdk.addPlugin(new StickerAssetSource());
await cesdk.addPlugin(new TextAssetSource());
await cesdk.addPlugin(new TextComponentAssetSource());
await cesdk.addPlugin(new TypefaceAssetSource());
await cesdk.addPlugin(new VectorShapeAssetSource());

// Create a video scene using the Instagram story page preset
await cesdk.actions.run('scene.create', {
  mode: 'Video',
  page: {
    sourceId: 'ly.img.page.presets',
    assetId: 'ly.img.page.presets.instagram.story'
  }
});
```

3. Configure UI Components#

AI Dock Button#

The main entry point for AI features is the AI dock button. Position it at the beginning of the dock:

```typescript
// Configure AI Apps dock position
cesdk.ui.setComponentOrder({ in: 'ly.img.dock' }, [
  'ly.img.ai.apps.dock',
  ...cesdk.ui.getComponentOrder({ in: 'ly.img.dock' })
]);
```

Canvas Menu Options#

AI text and image transformations are available in the canvas context menu:

```typescript
// Add AI options to canvas menu
cesdk.ui.setComponentOrder({ in: 'ly.img.canvas.menu' }, [
  'ly.img.ai.text.canvasMenu',
  'ly.img.ai.image.canvasMenu',
  ...cesdk.ui.getComponentOrder({ in: 'ly.img.canvas.menu' })
]);
```

4. Add the AI Apps Plugin#

Configure the AI Apps plugin with all providers:

```typescript
// Add the AI Apps plugin with all providers
cesdk.addPlugin(
  AiApps({
    // IMPORTANT: dryRun mode simulates generation without API calls
    // Perfect for testing and development - remove for production use
    dryRun: true,
    providers: {
      // Text generation and transformation
      text2text: Anthropic.AnthropicProvider({
        proxyUrl: 'http://your-proxy-server.com/api/proxy',
        headers: {
          'x-client-version': '1.0.0',
          'x-request-source': 'cesdk-tutorial'
        },
        // Optional: Configure default property values
        properties: {
          temperature: { default: 0.7 },
          maxTokens: { default: 500 }
        }
      }),

      // Image generation - Multiple providers with selection UI
      text2image: [
        FalAiImage.RecraftV3({
          proxyUrl: 'http://your-proxy-server.com/api/proxy',
          headers: {
            'x-client-version': '1.0.0',
            'x-request-source': 'cesdk-tutorial'
          },
          // Add upload middleware to store generated images on your server
          middleware: [
            uploadMiddleware(async (output) => {
              // Upload the generated image to your server
              const result = await uploadToYourStorageServer(output.url);

              // Return the output with your server's URL
              return { ...output, url: result.permanentUrl };
            })
          ]
        }),
        // Alternative with icon style support
        FalAiImage.Recraft20b({
          proxyUrl: 'http://your-proxy-server.com/api/proxy',
          headers: {
            'x-client-version': '1.0.0',
            'x-request-source': 'cesdk-tutorial'
          },
          // Configure dynamic defaults based on style type
          properties: {
            style: { default: 'broken_line' },
            image_size: { default: 'square_hd' }
          }
        }),
        // Additional image provider for user selection
        OpenAiImage.GptImage1.Text2Image({
          proxyUrl: 'http://your-proxy-server.com/api/proxy',
          headers: {
            'x-api-key': 'your-key',
            'x-request-source': 'cesdk-tutorial'
          }
        })
      ],

      // Image-to-image transformation
      image2image: FalAiImage.GeminiFlashEdit({
        proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
        headers: {
          'x-client-version': '1.0.0',
          'x-request-source': 'cesdk-tutorial'
        }
      }),

      // Video generation - Multiple providers
      text2video: [
        FalAiVideo.MinimaxVideo01Live({
          proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
          headers: {
            'x-client-version': '1.0.0',
            'x-request-source': 'cesdk-tutorial'
          }
        }),
        FalAiVideo.PixverseV35TextToVideo({
          proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
          headers: {
            'x-client-version': '1.0.0',
            'x-request-source': 'cesdk-tutorial'
          }
        })
      ],
      image2video: FalAiVideo.MinimaxVideo01LiveImageToVideo({
        proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
        headers: {
          'x-client-version': '1.0.0',
          'x-request-source': 'cesdk-tutorial'
        }
      }),

      // Audio generation
      text2speech: Elevenlabs.ElevenMultilingualV2({
        proxyUrl: 'https://your-server.com/api/elevenlabs-proxy',
        headers: {
          'x-client-version': '1.0.0',
          'x-request-source': 'cesdk-tutorial'
        }
      }),
      text2sound: Elevenlabs.ElevenSoundEffects({
        proxyUrl: 'https://your-server.com/api/elevenlabs-proxy',
        headers: {
          'x-client-version': '1.0.0',
          'x-request-source': 'cesdk-tutorial'
        }
      })
    }
  })
);
```

Testing with Dry-Run Mode#

During development, use dryRun: true to simulate AI generation without making actual API calls:

```typescript
// IMPORTANT: dryRun mode simulates generation without API calls
// Perfect for testing and development - remove for production use
dryRun: true,
```

This helps verify your integration and UI flows without incurring API costs or requiring valid API keys.
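One way to avoid shipping `dryRun: true` by accident is to derive the flag from build-time settings instead of hard-coding it. The following is a minimal sketch under stated assumptions: the `resolveDryRun` helper and the `AI_DRY_RUN` and `NODE_ENV` variable names are hypothetical and not part of the plugin API.

```typescript
// Hypothetical settings object; the variable names are assumptions for this sketch.
interface EnvLike {
  NODE_ENV?: string;
  AI_DRY_RUN?: string;
}

// Decide whether AI generation should be simulated.
function resolveDryRun(env: EnvLike): boolean {
  // An explicit override always wins.
  if (env.AI_DRY_RUN === 'true') return true;
  if (env.AI_DRY_RUN === 'false') return false;
  // Otherwise simulate generation everywhere except production builds.
  return env.NODE_ENV !== 'production';
}

// Example: the result would be passed as `dryRun` in the AiApps configuration.
const dryRun = resolveDryRun({ NODE_ENV: 'development' });
console.log(`dryRun enabled: ${dryRun}`);
```

With this approach, production builds make real API calls unless the override is set explicitly, while local development stays free of API costs by default.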

5. AI Provider Configuration#

Each AI provider type serves a specific purpose and creates different types of content:

Text Generation (Anthropic)#

```typescript
// Text generation and transformation
text2text: Anthropic.AnthropicProvider({
  proxyUrl: 'http://your-proxy-server.com/api/proxy',
  headers: {
    'x-client-version': '1.0.0',
    'x-request-source': 'cesdk-tutorial'
  },
  // Optional: Configure default property values
  properties: {
    temperature: { default: 0.7 },
    maxTokens: { default: 500 }
  }
}),
```

The text provider enables capabilities like:

- Improving writing quality

- Fixing spelling and grammar

- Making text shorter or longer

- Changing tone (professional, casual, friendly)

- Translating to different languages

- Custom text transformations

Image Generation#

Configure multiple image providers with selection UI:

```typescript
// Image generation - Multiple providers with selection UI
text2image: [
  FalAiImage.RecraftV3({
    proxyUrl: 'http://your-proxy-server.com/api/proxy',
    headers: {
      'x-client-version': '1.0.0',
      'x-request-source': 'cesdk-tutorial'
    },
    // Add upload middleware to store generated images on your server
    middleware: [
      uploadMiddleware(async (output) => {
        // Upload the generated image to your server
        const result = await uploadToYourStorageServer(output.url);

        // Return the output with your server's URL
        return { ...output, url: result.permanentUrl };
      })
    ]
  }),
  // Alternative with icon style support
  FalAiImage.Recraft20b({
    proxyUrl: 'http://your-proxy-server.com/api/proxy',
    headers: {
      'x-client-version': '1.0.0',
      'x-request-source': 'cesdk-tutorial'
    },
    // Configure dynamic defaults based on style type
    properties: {
      style: { default: 'broken_line' },
      image_size: { default: 'square_hd' }
    }
  }),
  // Additional image provider for user selection
  OpenAiImage.GptImage1.Text2Image({
    proxyUrl: 'http://your-proxy-server.com/api/proxy',
    headers: {
      'x-api-key': 'your-key',
      'x-request-source': 'cesdk-tutorial'
    }
  })
],

// Image-to-image transformation
image2image: FalAiImage.GeminiFlashEdit({
  proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
  headers: {
    'x-client-version': '1.0.0',
    'x-request-source': 'cesdk-tutorial'
  }
}),
```

When multiple providers are configured, users will see a selection box to choose between them.

Image generation features include:

- Creating images from text descriptions

- Multiple style options (realistic, illustration, vector)

- Various size presets and custom dimensions

- Transforming existing images based on text prompts

Video Generation#

```typescript
// Video generation - Multiple providers
text2video: [
  FalAiVideo.MinimaxVideo01Live({
    proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
    headers: {
      'x-client-version': '1.0.0',
      'x-request-source': 'cesdk-tutorial'
    }
  }),
  FalAiVideo.PixverseV35TextToVideo({
    proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
    headers: {
      'x-client-version': '1.0.0',
      'x-request-source': 'cesdk-tutorial'
    }
  })
],
image2video: FalAiVideo.MinimaxVideo01LiveImageToVideo({
  proxyUrl: 'https://your-server.com/api/fal-ai-proxy',
  headers: {
    'x-client-version': '1.0.0',
    'x-request-source': 'cesdk-tutorial'
  }
}),
```

Video generation capabilities include:

- Creating videos from text descriptions

- Transforming still images into videos

- Fixed output dimensions (typically 1280×720)

- 5-second video duration

Audio Generation (ElevenLabs)#

```typescript
// Audio generation
text2speech: Elevenlabs.ElevenMultilingualV2({
  proxyUrl: 'https://your-server.com/api/elevenlabs-proxy',
  headers: {
    'x-client-version': '1.0.0',
    'x-request-source': 'cesdk-tutorial'
  }
}),
text2sound: Elevenlabs.ElevenSoundEffects({
  proxyUrl: 'https://your-server.com/api/elevenlabs-proxy',
  headers: {
    'x-client-version': '1.0.0',
    'x-request-source': 'cesdk-tutorial'
  }
})
```

Audio generation features include:

- Text-to-speech with multiple voices

- Multilingual support

- Adjustable speaking speed

- Sound effect generation from text descriptions

- Creating ambient sounds and effects
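The provider configurations in this section repeat the same `proxyUrl` and `headers` values many times. A small factory can keep them in one place. This is only a sketch: `createProviderConfig`, `ProviderBaseConfig`, and `SHARED_HEADERS` are hypothetical names introduced here, and the shape merely mirrors the `proxyUrl` and `headers` options shown in this guide.

```typescript
// Hypothetical type mirroring the proxyUrl/headers options used in this guide.
interface ProviderBaseConfig {
  proxyUrl: string;
  headers: Record<string, string>;
}

// Headers shared by the provider examples in this guide.
const SHARED_HEADERS: Record<string, string> = {
  'x-client-version': '1.0.0',
  'x-request-source': 'cesdk-tutorial'
};

// Build a provider config for a proxy endpoint. Extra options
// (for example `properties` or `middleware`) are merged on top of the base.
function createProviderConfig<T extends object>(
  proxyUrl: string,
  extra: T = {} as T
): ProviderBaseConfig & T {
  const base: ProviderBaseConfig = {
    proxyUrl,
    // Copy the headers so callers cannot mutate the shared object.
    headers: { ...SHARED_HEADERS }
  };
  return { ...base, ...extra };
}

const falConfig = createProviderConfig('https://your-server.com/api/fal-ai-proxy');
const textConfig = createProviderConfig('http://your-proxy-server.com/api/proxy', {
  properties: { temperature: { default: 0.7 }, maxTokens: { default: 500 } }
});

console.log(falConfig.proxyUrl, Object.keys(textConfig));
```

The resulting objects could then be passed to the provider factories, for example `FalAiVideo.MinimaxVideo01Live(falConfig)`. Note that an `extra.headers` entry would replace the shared headers entirely in this sketch rather than merge with them.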

6. Using Middleware#

The AI generation framework supports middleware that can enhance or modify the generation process. Middleware functions are executed in sequence and can perform operations before generation, after generation, or both.
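To make the execution order concrete, here is a self-contained sketch of how a chain of this shape can be composed. It is not the plugin's internal implementation: `compose`, `Middleware`, `Next`, and the `trace` array are illustrative names, and the `(input, options, next)` signature is taken from the error-handling example later in this section.

```typescript
// Illustrative types; the plugin's real types may differ.
type Next<I, O> = (input: I, options: object) => Promise<O>;
type Middleware<I, O> = (input: I, options: object, next: Next<I, O>) => Promise<O>;

// Compose middleware so the first entry runs first and wraps everything after it.
function compose<I, O>(middleware: Middleware<I, O>[], generate: Next<I, O>): Next<I, O> {
  return middleware.reduceRight<Next<I, O>>(
    (next, current) => (input, options) => current(input, options, next),
    generate
  );
}

const trace: string[] = [];

// Runs code both before and after the rest of the chain.
const timing: Middleware<string, string> = async (input, options, next) => {
  trace.push('timing:before');
  const output = await next(input, options);
  trace.push('timing:after');
  return output;
};

// Modifies the input before generation.
const trimPrompt: Middleware<string, string> = async (input, options, next) => {
  trace.push('trim:before');
  return next(input.trim(), options);
};

// Stand-in for the actual provider call.
const fakeGenerate: Next<string, string> = async (input) => {
  trace.push('generate');
  return `generated(${input})`;
};

const run = compose([timing, trimPrompt], fakeGenerate);
const result = run('  a red bicycle  ', {});
result.then((output) => console.log(output, trace));
```

In this sketch the trace ends up as `timing:before`, `trim:before`, `generate`, `timing:after`: each middleware sees the request in list order, and any code placed after `await next(...)` runs in reverse order once generation has finished.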

Upload Middleware#

The uploadMiddleware is useful when you need to store generated content on your server before it’s used. First, create a helper function for your storage server:

```typescript
/**
 * Upload to your image storage server.
 * Replace this mock with your actual storage API call.
 */
async function uploadToYourStorageServer(imageUrl: string) {
  // In production, upload the image to your server:
  // const response = await fetch('https://your-server.com/api/store-image', {
  //   method: 'POST',
  //   headers: { 'Content-Type': 'application/json' },
  //   body: JSON.stringify({
  //     imageUrl,
  //     metadata: { source: 'ai-generation' }
  //   })
  // });
  // return await response.json();

  // Mock: Return a fake response
  return { permanentUrl: imageUrl };
}
```

Then use uploadMiddleware to process generated outputs before they’re added to the scene:

```typescript
// Add upload middleware to store generated images on your server
middleware: [
  uploadMiddleware(async (output) => {
    // Upload the generated image to your server
    const result = await uploadToYourStorageServer(output.url);

    // Return the output with your server's URL
    return { ...output, url: result.permanentUrl };
  })
]
```

Use cases for upload middleware:

- Storing generated assets in your own cloud storage

- Adding watermarks or processing assets before use

- Tracking/logging generated content

- Implementing licensing or rights management
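If your storage server is temporarily unavailable, you may prefer to keep the provider's original URL rather than fail the whole generation. The sketch below wraps an upload callback with that fallback. `withUploadFallback`, `Uploader`, and the `{ url }` output shape are assumptions for illustration, based on the `output.url` field used above; the wrapped function is what you would hand to `uploadMiddleware`.

```typescript
// Assumed minimal output shape, based on the `output.url` field used in this guide.
interface GeneratedOutput {
  url: string;
}

type Uploader = (url: string) => Promise<{ permanentUrl: string }>;

// Hypothetical wrapper: try to upload, but fall back to the original output on failure.
function withUploadFallback(upload: Uploader) {
  return async <T extends GeneratedOutput>(output: T): Promise<T> => {
    try {
      const result = await upload(output.url);
      return { ...output, url: result.permanentUrl };
    } catch (error) {
      // Keep the provider URL so the user still gets a result.
      console.warn('Upload failed, using original URL:', error);
      return output;
    }
  };
}

// Stub uploaders standing in for a real storage API.
const okUpload: Uploader = async (url) => ({
  permanentUrl: `https://cdn.example.com/${encodeURIComponent(url)}`
});
const failingUpload: Uploader = async () => {
  throw new Error('storage unavailable');
};

const stored = withUploadFallback(okUpload)({ url: 'https://tmp.example.com/a.png' });
const fallback = withUploadFallback(failingUpload)({ url: 'https://tmp.example.com/a.png' });

Promise.all([stored, fallback]).then(([a, b]) => console.log(a.url, b.url));
```

Whether a fallback is appropriate depends on how long the provider keeps its URLs valid. If generated assets must always live on your own storage, rethrowing the error is the safer choice.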

Rate Limiting Middleware#

To prevent abuse of AI services, you can implement rate limiting:

import { rateLimitMiddleware } from '@imgly/plugin-ai-generation-web';

```typescript
// In your provider configuration
middleware: [
  rateLimitMiddleware({
    maxRequests: 10,
    timeWindowMs: 60 * 60 * 1000, // 1 hour
    onRateLimitExceeded: (input, options, info) => {
      // Show a notice to the user
      console.log(`Rate limit reached: ${info.currentCount}/${info.maxRequests}`);
      return false; // Reject the request
    }
  })
]
```

Custom Error Handling Middleware#

You can create custom middleware for error handling:

```typescript
const errorMiddleware = async (input, options, next) => {
  try {
    return await next(input, options);
  } catch (error) {
    // Handle error (show UI notification, log, etc.)
    console.error('Generation failed:', error);
    // You can rethrow or return a fallback
    throw error;
  }
};
```

Middleware Order#

The order of middleware is important - they’re executed in the sequence provided:

```typescript
middleware: [
  // Executes first
  rateLimitMiddleware({ maxRequests: 10, timeWindowMs: 3600000 }),

  // Executes second (only if rate limit wasn't exceeded)
  loggingMiddleware(),

  // Executes third (after generation completes)
  uploadMiddleware(async (output) => { /* ... */ })
]
```

7. Controlling Features with Feature API#

You can control which AI features are available to users using CE.SDK’s Feature API:

```typescript
// Control AI features with Feature API
// Disable specific quick actions
cesdk.feature.enable(
  'ly.img.plugin-ai-image-generation-web.quickAction.editImage',
  false
);
cesdk.feature.enable(
  'ly.img.plugin-ai-text-generation-web.quickAction.translate',
  false
);

// Control input types for image/video generation
cesdk.feature.enable(
  'ly.img.plugin-ai-image-generation-web.fromText',
  true
);
cesdk.feature.enable(
  'ly.img.plugin-ai-image-generation-web.fromImage',
  false
);

// Hide provider selection dropdowns
cesdk.feature.enable(
  'ly.img.plugin-ai-image-generation-web.providerSelect',
  false
);

// Control style groups for specific providers
cesdk.feature.enable(
  'ly.img.plugin-ai-image-generation-web.fal-ai/recraft-v3.style.vector',
  false
);
```

This is useful for:

- Creating different feature tiers for different user groups

- Simplifying the UI by hiding unused features

- Temporarily disabling features during maintenance

For more details on available feature flags, see the @imgly/plugin-ai-generation-web documentation.
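As a sketch of the feature-tier use case, tiers can be expressed as a plain map from tier name to flag values and applied in a loop. The `FeatureApi` interface below models only the `enable(id, enabled)` call used in this section, and the tier names, `TIER_FLAGS`, and `applyTier` are hypothetical.

```typescript
// Models only the cesdk.feature.enable(id, enabled) call shown in this section.
interface FeatureApi {
  enable(featureId: string, enabled: boolean): void;
}

type Tier = 'free' | 'pro';

// Hypothetical tiers; the feature IDs are the ones used earlier in this section.
const TIER_FLAGS: Record<Tier, Record<string, boolean>> = {
  free: {
    'ly.img.plugin-ai-image-generation-web.fromText': true,
    'ly.img.plugin-ai-image-generation-web.fromImage': false,
    'ly.img.plugin-ai-image-generation-web.providerSelect': false
  },
  pro: {
    'ly.img.plugin-ai-image-generation-web.fromText': true,
    'ly.img.plugin-ai-image-generation-web.fromImage': true,
    'ly.img.plugin-ai-image-generation-web.providerSelect': true
  }
};

// Apply every flag of the given tier through the feature API.
function applyTier(feature: FeatureApi, tier: Tier): void {
  for (const [featureId, enabled] of Object.entries(TIER_FLAGS[tier])) {
    feature.enable(featureId, enabled);
  }
}

// Recording stub so the sketch runs without CE.SDK.
const calls: Array<[string, boolean]> = [];
const recorder: FeatureApi = {
  enable: (featureId, enabled) => {
    calls.push([featureId, enabled]);
  }
};

applyTier(recorder, 'free');
console.log(calls);
```

In an application you would pass `cesdk.feature` instead of the recording stub, assuming its `enable` method accepts the arguments shown in this section.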

8. Proxy Server Configuration#

Never include your AI service API keys in client-side code, where anyone can read them. Instead, set up a proxy service that forwards requests to the AI providers and keeps the keys on the server.

Each AI provider configuration requires a proxyUrl parameter, which should point to your server-side endpoint that handles authentication and forwards requests to the AI service:

```typescript
text2image: FalAiImage.RecraftV3({
  proxyUrl: 'http://your-proxy-server.com/api/proxy'
});
```

Your proxy server should handle authentication, forward requests to the appropriate AI service providers, and manage response streaming for optimal performance.
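The core of such a proxy is replacing whatever credentials the browser sent with the server-side key before forwarding the request. The sketch below shows only that header-rewriting step as a pure function; it is not a complete proxy. The function name, the blocked-header list, and the `Authorization` value format are assumptions, so check each AI service's documentation for the authentication scheme it expects.

```typescript
// Headers that should not be forwarded from the browser to the AI service.
// This list is an assumption for the sketch, not an exhaustive security policy.
const BLOCKED_HEADERS = new Set([
  'authorization',
  'x-api-key',
  'cookie',
  'host',
  'content-length'
]);

// Hypothetical helper: build the headers for the upstream request.
function buildUpstreamHeaders(
  clientHeaders: Record<string, string>,
  serverAuthHeader: { name: string; value: string }
): Record<string, string> {
  const upstream: Record<string, string> = {};
  for (const [name, value] of Object.entries(clientHeaders)) {
    const lower = name.toLowerCase();
    // Drop client-supplied credentials and connection-specific headers.
    if (!BLOCKED_HEADERS.has(lower)) {
      upstream[lower] = value;
    }
  }
  // Inject the secret that only the server knows, e.g. read from an environment variable.
  upstream[serverAuthHeader.name.toLowerCase()] = serverAuthHeader.value;
  return upstream;
}

const forwarded = buildUpstreamHeaders(
  {
    'Content-Type': 'application/json',
    'x-client-version': '1.0.0',
    Authorization: 'Bearer from-browser',
    Cookie: 'session=abc'
  },
  { name: 'Authorization', value: 'Bearer SERVER_SIDE_KEY' }
);

console.log(forwarded);
```

A real endpoint would combine this step with request validation, forwarding of the body to the provider, and streaming the response back to the client.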

API Reference#

| Method | Category | Purpose |
|---|---|---|
| `cesdk.addPlugin()` | Plugin | Register and initialize the AI Apps plugin with CE.SDK |
| `AiApps()` | Plugin | Create unified plugin instance with all AI provider types |
| `Anthropic.AnthropicProvider()` | Text | Configure Claude for text generation and transformation |
| `FalAiImage.RecraftV3()` | Image | Configure RecraftV3 text-to-image with vector/raster support |
| `FalAiImage.Recraft20b()` | Image | Configure Recraft20b text-to-image with icon styles |
| `FalAiImage.GeminiFlashEdit()` | Image | Configure Gemini Flash for image-to-image transformation |
| `OpenAiImage.GptImage1.Text2Image()` | Image | Configure GPT Image for text-to-image generation |
| `FalAiVideo.MinimaxVideo01Live()` | Video | Configure Minimax Video for text-to-video generation |
| `FalAiVideo.MinimaxVideo01LiveImageToVideo()` | Video | Configure Minimax Video for image-to-video transformation |
| `FalAiVideo.PixverseV35TextToVideo()` | Video | Configure Pixverse for text-to-video generation |
| `Elevenlabs.ElevenMultilingualV2()` | Audio | Configure ElevenLabs for multilingual text-to-speech |
| `Elevenlabs.ElevenSoundEffects()` | Audio | Configure ElevenLabs for sound effect generation |
| `uploadMiddleware()` | Middleware | Process and store generated outputs before use |
| `rateLimitMiddleware()` | Middleware | Limit generation requests per time window |
| `cesdk.feature.enable()` | Feature | Control visibility of AI features and providers |
| `cesdk.ui.setComponentOrder({ in: 'ly.img.dock' }, order)` | UI | Position AI Apps button in the dock |
| `cesdk.ui.setComponentOrder({ in: 'ly.img.canvas.menu' }, order)` | UI | Add AI options to canvas context menu |
| `engine.asset.findAssets()` | Asset | Query generated assets from provider history sources |

Next Steps#

- Proxy Server — Set up secure API communication for production

- Text Generation — Deep dive into text generation and transformation

- Image Generation — Advanced image generation configuration

- Video Generation — Video generation with multiple providers

- Audio Generation — Text-to-speech and sound effects

- Custom Provider — Create custom AI providers

- Asset Library Basics — Work with generated assets