Introduction

You may know this situation: You are out on a trip when suddenly a unique opportunity for a photograph appears, like a wild animal showing up or sun rays breaking through the rain clouds for a few seconds. Without hesitation, you grab your camera and capture the sight. Later you discover that a distracting object, like a road sign, is ruining your shot. Time for some cumbersome retouching.

Now, imagine you could erase the distracting object just by highlighting it. Wonderful! From the field of deep learning, a technique for image manipulation called Image Inpainting makes it possible. Image Inpainting aims to cut out undesired parts of an image and fill in the missing information with plausible patterns, colors, and textures that match the surroundings.

Today we would like to share experiences that we have gained during the application of deep learning inpainting approaches. Furthermore, we’ll present some quality optimization steps that we have implemented to improve results addressing the transformation to high-resolution outputs. But let us start with a quick introduction: who are we and why are we concerned with these kinds of topics?

We are a small consortium consisting of the Bochumer Institute of Technology, a research institute aiming to transfer knowledge from academia into industry, and the company IMG.LY, a team of software engineers and designers developing creative tools like the PhotoEditor SDK and the UBQ engine. Together we are working in the EFRE.NRW funded research project KI Design that targets artificial intelligence (AI) and deep learning-based algorithms for image content analysis and modification, as well as a leveraging tool kit for aesthetic improvements.

Image Inpainting has been a viable technique in image processing for quite some time, even before "Artificial Intelligence" was on everyone's lips. Common to most inpainting algorithms is that an area of an image is highlighted to be corrected. Many conventional algorithms then analyze the statistical distribution of the image to fill the resulting gap by finding and using nearest-neighbor patches. The best-known, state-of-the-art representative of this method is the PatchMatch algorithm. It uses a fast, structured randomized search to identify the approximate nearest-neighbor patches that will fill in the respective part of the image.

However, there are two drawbacks: first, regardless of the approximation, performance might still be an issue, and second, the results suffer from a lack of semantic understanding of the scene. Thus, researchers explored new directions and applied AI- and neural network-based approaches to solve these issues. For us, the removal of annoying background content is a useful feature, as it improves the overall image aesthetic. Having this available on mobile devices would be particularly interesting. Due to performance limitations and ever-improving integrated cameras, a mobile solution requires a fast and lightweight model architecture as well as the ability to process high-resolution images.

In summary, our expectations for an AI-based inpainting algorithm are:

- removal of (manually highlighted) background objects/persons

- feasibility to process high-resolution images

- fast and lightweight network (applicable for smartphones)

The number of publications addressing this or similar requirements has increased enormously in recent years. After digging into the literature, we identified two promising approaches and tested them.

Testing and Comparing Model Architectures

These selected networks were based on the latest scientific findings and appeared to provide high-quality output. Both approaches have well-documented repositories – a special thank you to the authors for their great work (of repositories and papers as well)! The selected networks are:

- Partial Convolutional Neural Networks (PCONV); [paper, github-repository]

- Generative Multi-column Convolutional Neural Networks (GMCNN); [paper, github-repository]

You may be wondering why exactly we chose these models for comparison purposes, as "the latest scientific findings" sounds a bit vague. Indeed, it is considerably difficult to identify the best-fitting model architecture. As far as we know, there is no standardized validation method or data set. Most papers demonstrate their results on self-selected test images and compare them with approaches that are again self-selected. The only option we had was to evaluate models that seemed reasonable to us. A validation method or standardized test set could be a valuable scientific contribution here.

Let’s turn back to the selected models. The PCONV network uses multiple convolutional layers and adds a partial convolutional layer. The key feature is that the convolution does not consider invalid pixels, which are indicated by a mask that is updated as it passes through the network. This prevents the algorithm from picking up the color of the mask (typically the average color tone of the image) and transmitting it into the reconstruction process.
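To make the masking idea more concrete, here is a minimal single-channel NumPy sketch of a partial convolution. It is an illustration under our own assumptions, not the PCONV repository code: the function name `partial_conv2d` is hypothetical, and the rescaling by the share of valid pixels follows our reading of the PCONV paper.

```python
import numpy as np

def partial_conv2d(image, mask, kernel, bias=0.0):
    """Single-channel partial convolution without padding (illustrative sketch).

    image:  2-D float array
    mask:   2-D array, 1 = valid pixel, 0 = hole
    kernel: 2-D float array of weights
    Returns (output, updated_mask).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w), dtype=float)
    new_mask = np.zeros((out_h, out_w), dtype=float)
    window_size = kh * kw

    for y in range(out_h):
        for x in range(out_w):
            m = mask[y:y + kh, x:x + kw]
            valid = m.sum()
            if valid > 0:
                patch = image[y:y + kh, x:x + kw]
                # Only valid pixels contribute; rescale to make up for the missing ones.
                output[y, x] = (kernel * patch * m).sum() * (window_size / valid) + bias
                # The window saw at least one valid pixel, so the output pixel is valid.
                new_mask[y, x] = 1.0
    return output, new_mask

# Example: a flat image whose hole is filled with a misleading "mask color" of 100.
image = np.ones((5, 5))
image[2, 2] = 100.0
mask = np.ones((5, 5))
mask[2, 2] = 0
kernel = np.full((3, 3), 1.0 / 9.0)  # simple averaging kernel
out, new_mask = partial_conv2d(image, mask, kernel)
```

In this toy example every output value stays at 1.0, whereas a plain averaging convolution would yield 12.0 because the hole value leaks into the result.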

The GMCNN, a GAN-based model, has a special architecture consisting of three networks: a generator, split up into three branches addressing different feature levels; a local and a global discriminator; and a VGG19 net that calculates the implicit diversified Markov random field (ID-MRF) introduced in the paper. This ID-MRF serves as a loss term comparing generated content with nearest-neighbor patches of the ground truth image. While the interaction of all three networks is required in the training phase, only the generative network is used for testing and production. More details and figures regarding the model architecture are available in the official paper.

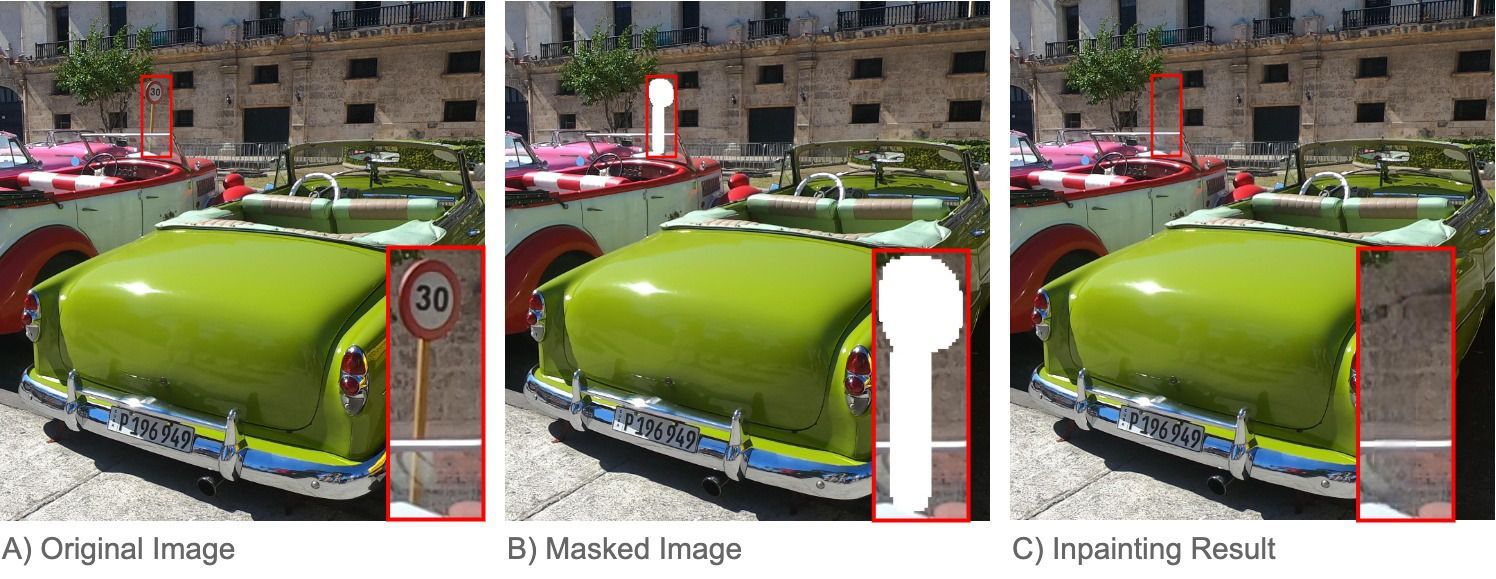

Due to the lack of standardized sets, we created our own test sets addressing different levels of complexity. These also included image data requiring an understanding of semantic structures. In our comparison, we paid special attention to ensuring that the filled content was harmonious and that artifacts were kept to a minimum. In particular, image artifacts could cause problems when the results are later transferred to high resolution. Here is an example output of our tests:

In comparison, the inpainting result based on PCONV suffered from some blurred artifacts and erratically deviating shades, cf. Figure 1C, whereas the GMCNN-based result appeared to be more precise and plausible concerning the semantic context, cf. Figure 1D. You can see this clearly when you look at the grille door that was covered by a person. The GMCNN approach, cf. Figure 1D, recognized and respected the grid structure, while PCONV overlaid it with a uniform (black) color tone. Considering the results on all test data, we decided to follow up with the GMCNN.

However, we would like to emphasize that this does not mean that one model architecture is better suited for Image Inpainting than the other. With further training or different test sets, the weights we used for the PCONV architecture may achieve similar results.

What About High-Resolution Inpainting?

At the current state of the model, the processing of high-resolution images remains unaddressed. Out of all the papers and repositories we found, even those promising high resolution often targeted image sizes of 1024x1024 pixels at most. We expected a resolution of substantially more than 2000x2000 pixels. One reason for this gap seemed to be the hardware-demanding and time-consuming training phase when processing high-resolution images.

Furthermore, the application of a high-resolution inpainting model could entail performance issues. These are not negligible for us, as we are aiming for a prospective implementation on smartphones, which can't keep up with the power of a modern graphics card. Thus, an additional challenge is a high-quality transformation of low-resolution outputs to high resolution.

Apply Low-Resolution Inpainting Output to High-Resolution Images

The GMCNN model was trained with the Places dataset, formatted in a 512x680 resolution. Feeding in high-resolution images would exceed the training input size by far and further require information of feature dimensions that the model has never seen before. That could result in almost completely distorted reconstructions.

A straightforward solution is to downscale the high-resolution image before feeding it to the model and then resize the result back up to the original image size. Due to the upscaling (e.g., via bicubic interpolation), the image details suffer from a loss of quality. Therefore, a better approach is to take only the masked areas of the upscaled inpainting prediction and stitch them back into the original image. That prevents the loss of initially known details in the unmasked regions. For the remaining inpainted regions, the lack of image details, as well as the artifacts and distortions, poses a complex challenge that we aimed to overcome with the following approaches.
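The stitching step can be sketched in a few lines of NumPy. This is a minimal illustration, not our production code: the helper names `resize_nearest` and `stitch` are hypothetical, and nearest-neighbor upscaling is used here only to keep the example dependency-free (in practice, an interpolation such as bicubic would be used).

```python
import numpy as np

def resize_nearest(image, height, width):
    """Nearest-neighbor resize for 2-D or 3-D (H, W, C) arrays (stand-in for bicubic)."""
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    return image[rows[:, None], cols]

def stitch(original, prediction_lowres, mask):
    """Upscale the low-resolution prediction and copy only the masked pixels
    into the original image, so that unmasked regions keep their full detail.

    mask: 2-D array at the original resolution, nonzero = region to inpaint.
    """
    h, w = original.shape[:2]
    upscaled = resize_nearest(prediction_lowres, h, w)
    hole = mask.astype(bool)
    if original.ndim == 3:
        hole = hole[..., None]  # broadcast the mask over the color channels
    return np.where(hole, upscaled, original)
```

Only the pixels under the mask are taken from the upscaled prediction; everything else comes untouched from the original image.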

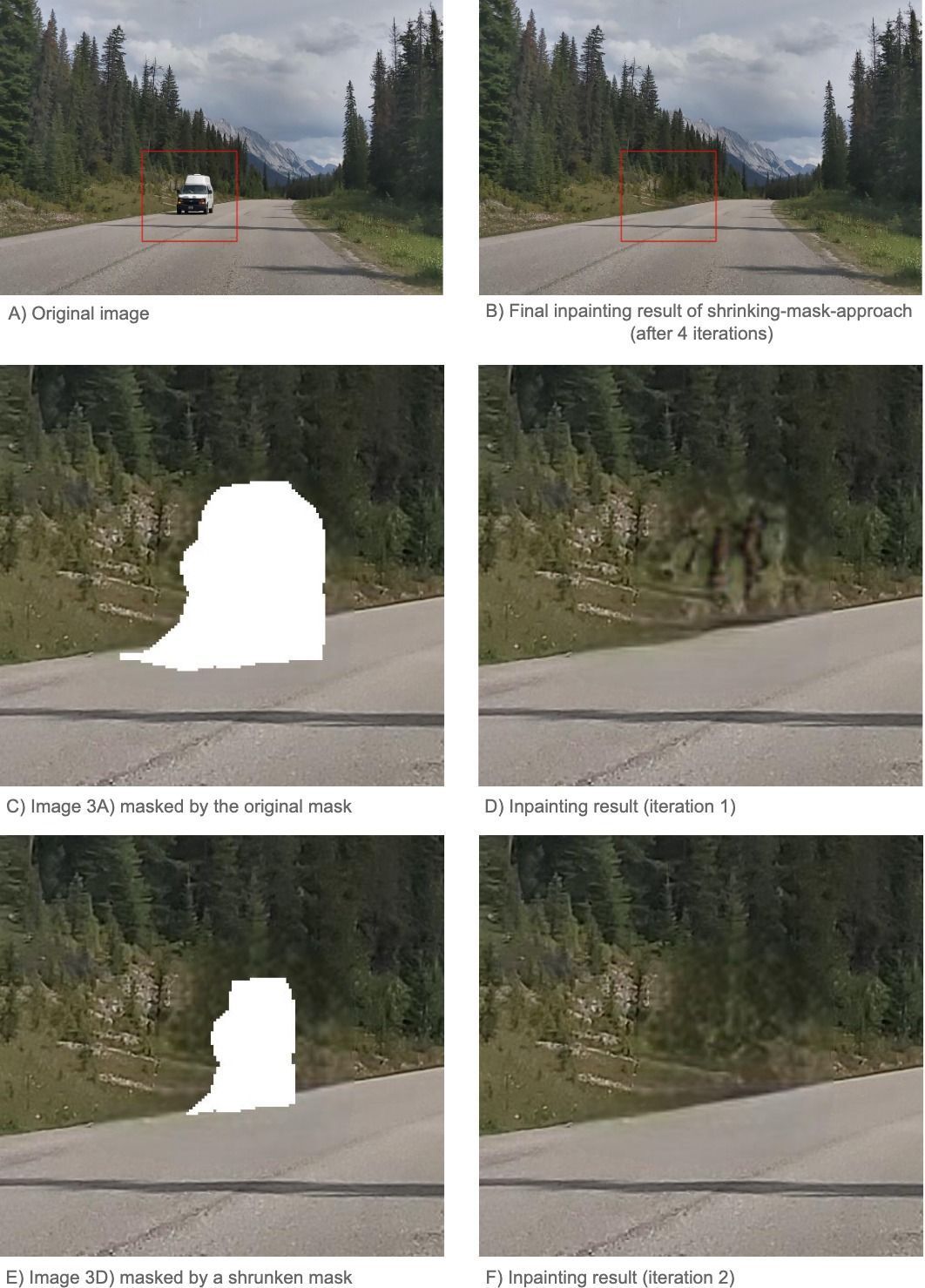

Shrinking-Mask-Approach

While the inpainted area mostly yields realistic-looking content for the more marginal regions, the quality decreases strongly towards the center, cf. Figure 3D. We especially noticed this behavior for larger masks. Consequently, a recursive inpainting procedure with an iteratively shrinking mask, cf. Figure 3E, seems to be a reasonable approach. With this concept, we try to improve the inpainting results progressively, from the boundary to the center of the masked regions, while utilizing the generated information of the preceding recursion, cf. Figure 3F.

To us, it was essential to have a dynamic method that can handle all mask shapes and sizes. Therefore, we decided to apply an erosion kernel to the original mask recursively until it is fully eroded. The number of shrunk masks determines the number of inpainting passes performed by the network.
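The following sketch illustrates the idea with a plain NumPy 3x3 erosion. The kernel size, the function names, and the `inpaint` callable (standing in for the network) are assumptions made for illustration and may differ from the actual implementation.

```python
import numpy as np

def erode(mask):
    """3x3 binary erosion: a pixel stays set only if its whole neighborhood is set.
    Pixels outside the image count as unset, so every mask eventually vanishes."""
    padded = np.pad(mask.astype(bool), 1, constant_values=False)
    h, w = mask.shape
    out = np.ones((h, w), dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out &= padded[dy:dy + h, dx:dx + w]
    return out

def shrinking_masks(mask):
    """Return the original mask followed by its successive erosions."""
    masks = []
    current = mask.astype(bool)
    while current.any():
        masks.append(current)
        current = erode(current)
    return masks

def recursive_inpaint(image, mask, inpaint):
    """Run `inpaint(image, mask)` once per shrunk mask, feeding each result
    into the next pass. `inpaint` is a hypothetical callable wrapping the model."""
    for current_mask in shrinking_masks(mask):
        image = inpaint(image, current_mask)
    return image
```

For a 5x5 square mask, for example, this produces three masks (5x5, 3x3, 1x1) and therefore three inpainting passes.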

Two-Step-Approach

While investigating and testing various quality optimization steps, we also fed high-resolution images into our model and discovered that the results for smaller masks were convincing. That led us to the hypothesis that not the resolution but rather the number of pixels to reconstruct seems to be the limiting factor. This finding served as the basis for our two-step-approach.

Briefly, the approach works as follows. In the first step, we perform inpainting on a downscaled high-resolution image while applying the original mask. In a second step, we transfer the model output of step one into a higher resolution and perform inpainting again. This time we apply a modified mask containing only small coherent mask regions, for which we exploit the provided higher resolution context information.

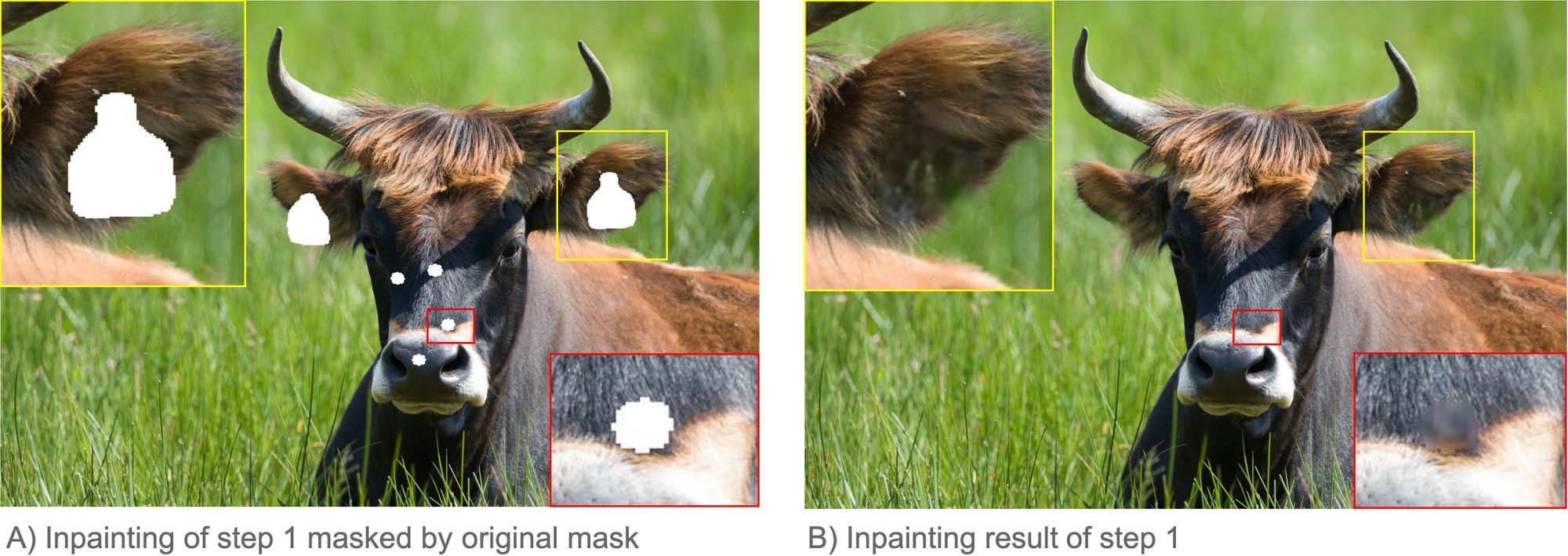

In more detail, the first step is characterized as the baseline approach, cf. Figure 5: We scale the masked image down to the training resolution of 512x680 pixels and fill up the missing information.

Optionally, the shrinking-mask-approach can be applied in the first step.

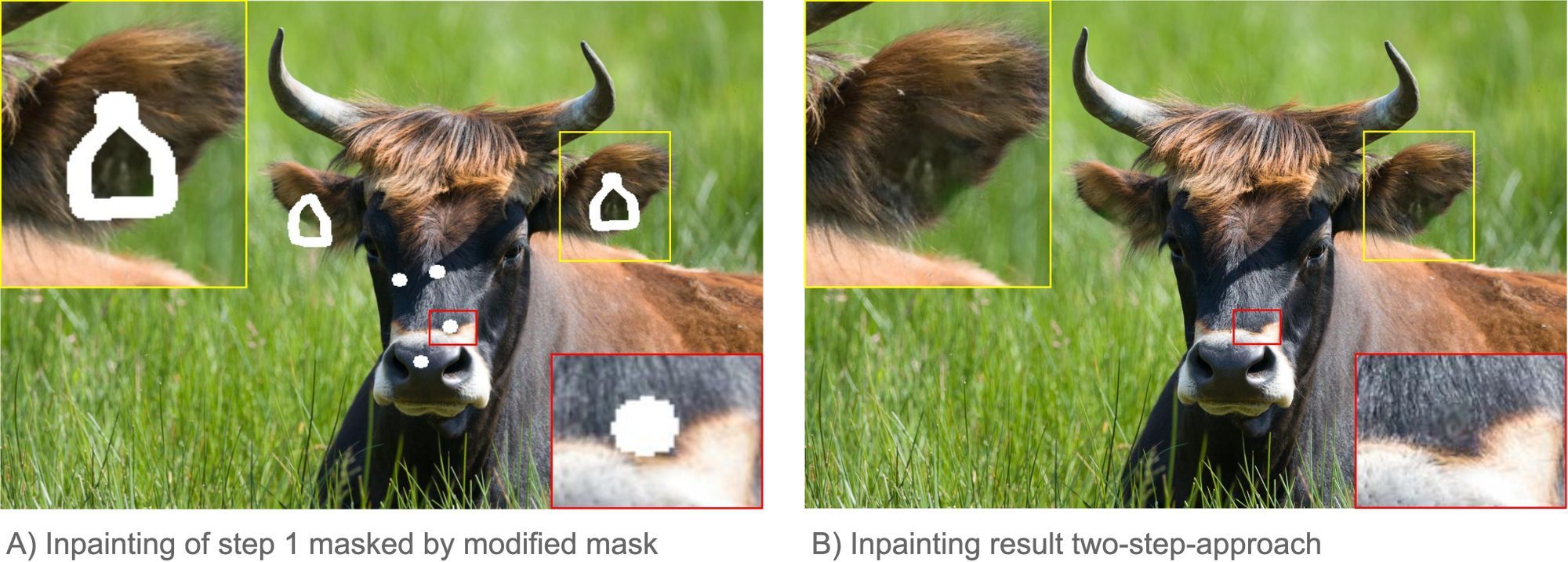

In the second step, we upscale the output of step 1 by a factor of two in each dimension, quadrupling the pixel count, to a resolution of 1024x1360 pixels. To prevent the resolution loss for unmasked regions caused by this upscaling, we stitch the generated content into the input image downscaled to the same size. The resulting image serves as the model input for step 2.

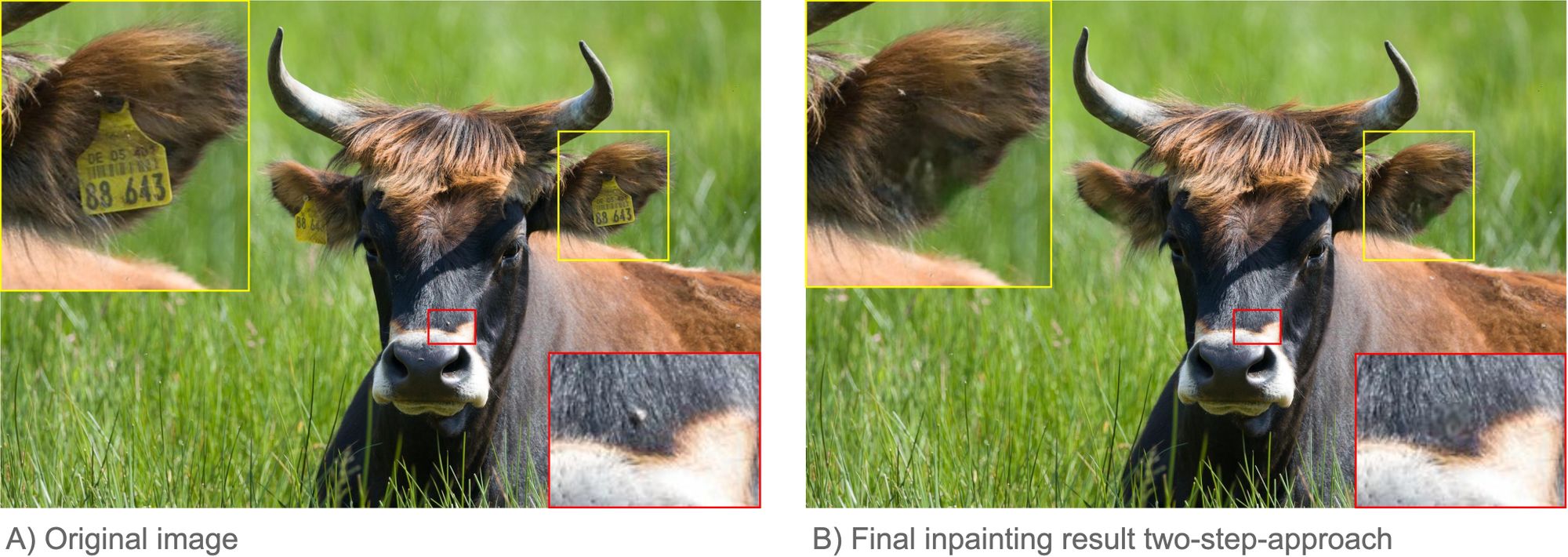

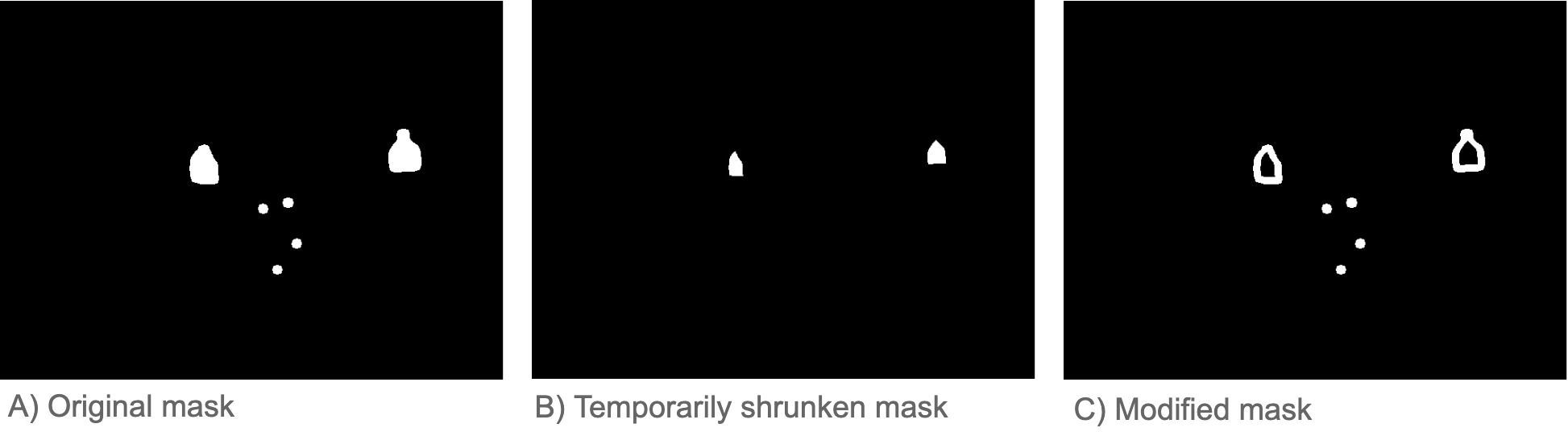

To avoid or at least reduce image artifacts in the subsequent inpainting process, we modify the original mask so that it contains only the small mask regions and the boundaries of the large mask regions. In detail, we temporarily shrink the mask with an erosion kernel to remove small mask segments and the marginal areas of larger mask sections, cf. Figure 6B. Finally, we calculate the difference between the original mask and the eroded mask, resulting in our desired modified mask, cf. Figure 6C.
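As a minimal sketch of this mask modification, assuming a 3x3 erosion kernel and a freely chosen number of erosion iterations (both are illustrative assumptions, as are the function names):

```python
import numpy as np

def erode(mask, iterations=1):
    """Apply a 3x3 binary erosion `iterations` times (pixels outside count as unset)."""
    current = mask.astype(bool)
    h, w = current.shape
    for _ in range(iterations):
        padded = np.pad(current, 1, constant_values=False)
        eroded = np.ones((h, w), dtype=bool)
        for dy in range(3):
            for dx in range(3):
                eroded &= padded[dy:dy + h, dx:dx + w]
        current = eroded
    return current

def boundary_and_small_regions(mask, iterations=1):
    """Original mask minus its eroded version: keeps small regions entirely
    and only the border band of large regions."""
    original = mask.astype(bool)
    core = erode(original, iterations)  # only the interiors of large regions survive
    return original & ~core

# Example: one large 7x7 region and one small 2x2 region.
mask = np.zeros((12, 12), dtype=bool)
mask[1:8, 1:8] = True
mask[9:11, 9:11] = True
modified = boundary_and_small_regions(mask, iterations=1)
```

In the example, the small region is kept completely, while the large region is reduced to a one-pixel-wide frame; more iterations widen the frame and raise the size threshold below which a region counts as small.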

By re-inpainting, we double the resolution of the generated content for the small contiguous mask regions, cf. Figure 7A bottom right, as well as for the masked boundary areas, cf. Figure 7A upper left. Moreover, through the latter, we achieve smoothing of the intense decay in resolution between the unmasked regions and the generated content arising from step 1. Finally, we scale our image back to the original input resolution and stitch the generated content to the original image to maintain the original resolution for unmasked areas.

Conclusion

For us, it was impressive to see how successfully, and with what deceptive realism, AI-based inpainting can fill in missing information. Compared to conventional approaches, not only the consideration of structural (semantic) content is an advantage, but especially the lower hardware requirements. In our view, this opens up the opportunity to reach a much larger group of users of inpainting algorithms: instead of relying on powerful hardware and professional software, users could make small but decisive changes on mobile devices.

In summary, we have dealt with inpainting for high-resolution images, which is undoubtedly gaining in importance due to ever-improving smartphone cameras. Processing high-resolution images entails an increasing number of pixels to "inpaint" and can lead to quality as well as performance issues. Thus, we decided to improve the output of low-resolution networks and to provide them with more information to support a subsequent upscaling procedure.

We have implemented two different approaches, the shrinking-mask approach and the two-step approach, which can be applied independently or in combination. It turned out that both methods subjectively increased the image quality. However, this comes with higher computational demands, as the model is applied multiple times.

Overall, we think that the combination of these two approaches will represent a good toolkit for AI-based high-resolution image inpainting. But we’ll keep an eye on the upcoming scientific developments.