Here's the crux of product development in the age of LLMs: how much can AI truly accelerate the development process?

We have seen videos of solo developers building small apps entirely with AI with just a few prompts. But how does it scale to more complex development projects? As LLMs rapidly evolve, their scope and impact will only increase.

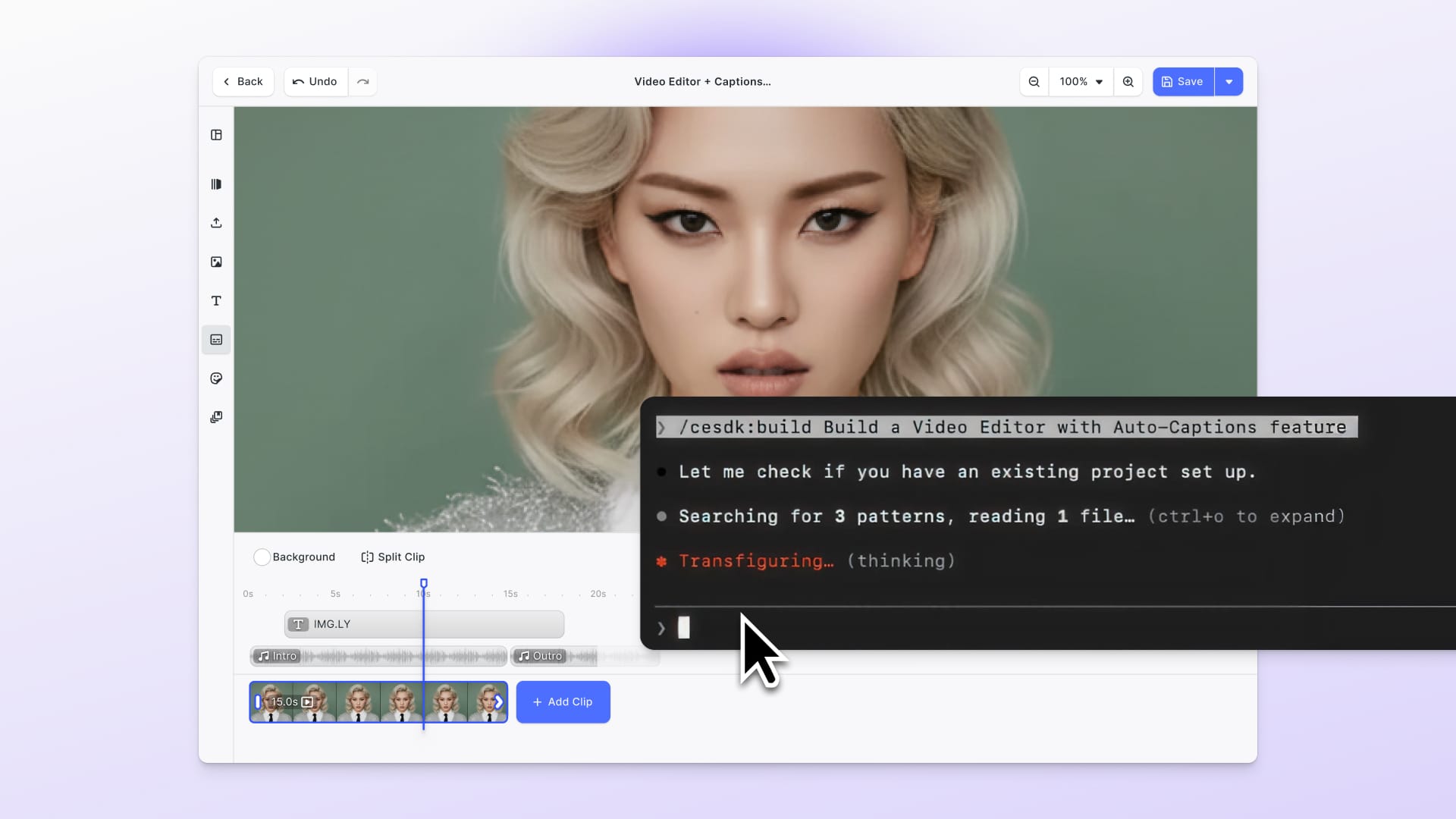

That’s why I regularly challenge myself to build a small project with the help of AI. I’m a prime candidate to test the AI productivity boost: a jack-of-all-trades (and a master of none) with a background in both design and engineering, yet no hands-on experience in the past five years. My latest challenge? Build a web-based short video generator within one day.

In this post, I’ll share the most intriguing takeaways from tackling this project.

Why a Short Video Generator?

Why focus on this idea? It’s simple: to ride the wave of a new trend. A format called "faceless" short videos is gaining traction among creators on platforms like YouTube and TikTok.

What’s fascinating about these videos is their automation: an LLM generates a script, which is then transformed into speech, images, and text assets using various AI services. These assets are automatically assembled into a cohesive video.

The general concept is compelling: It’s still generative content, but mixed with classic video composition techniques. This approach offers greater accuracy, consistency, and control than pure generative AI.

The potential to automate video production at this scale is exciting. Add its relatively low complexity and high production value, and it was the perfect topic for my challenge.

Enter CE.SDK

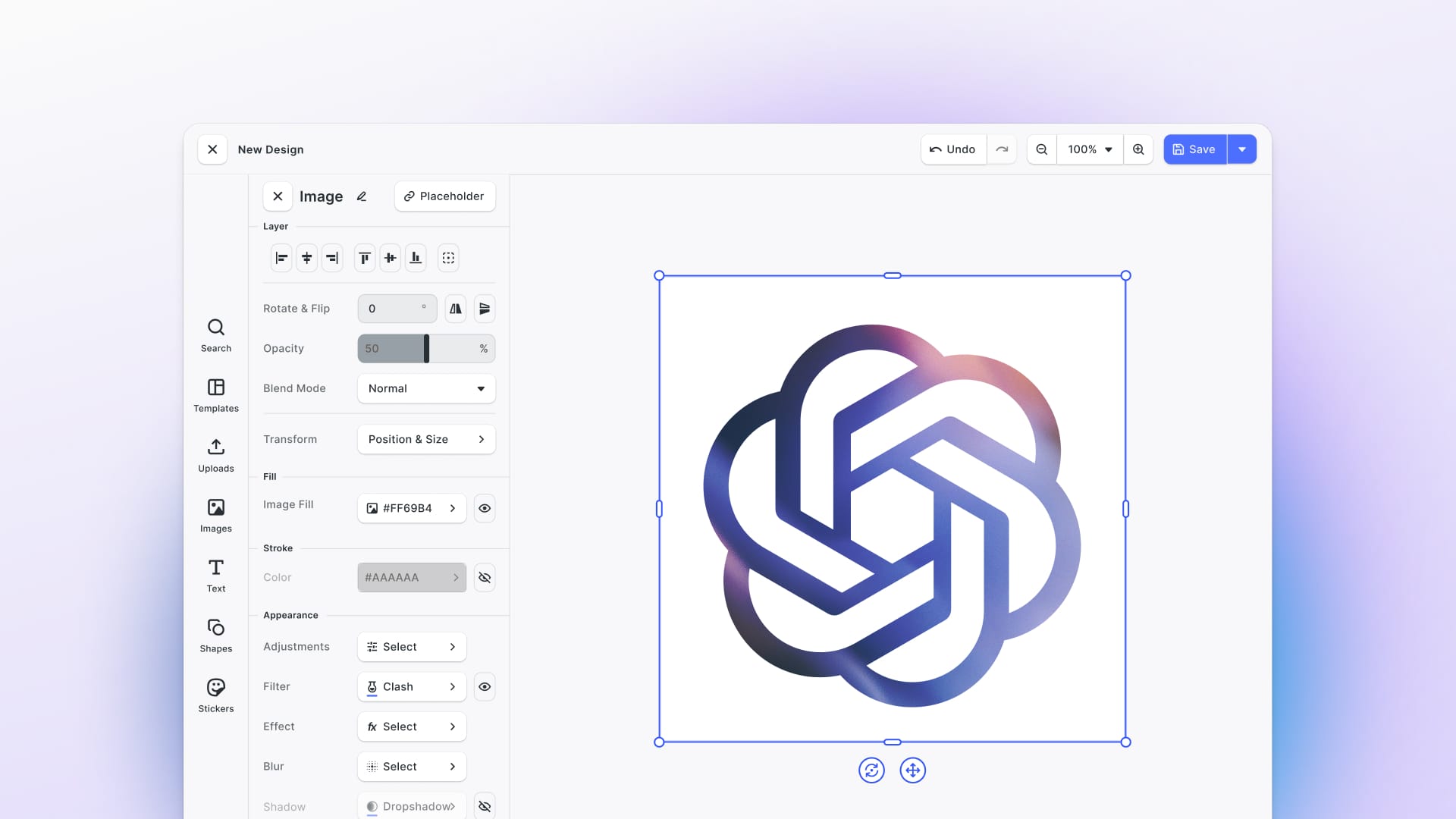

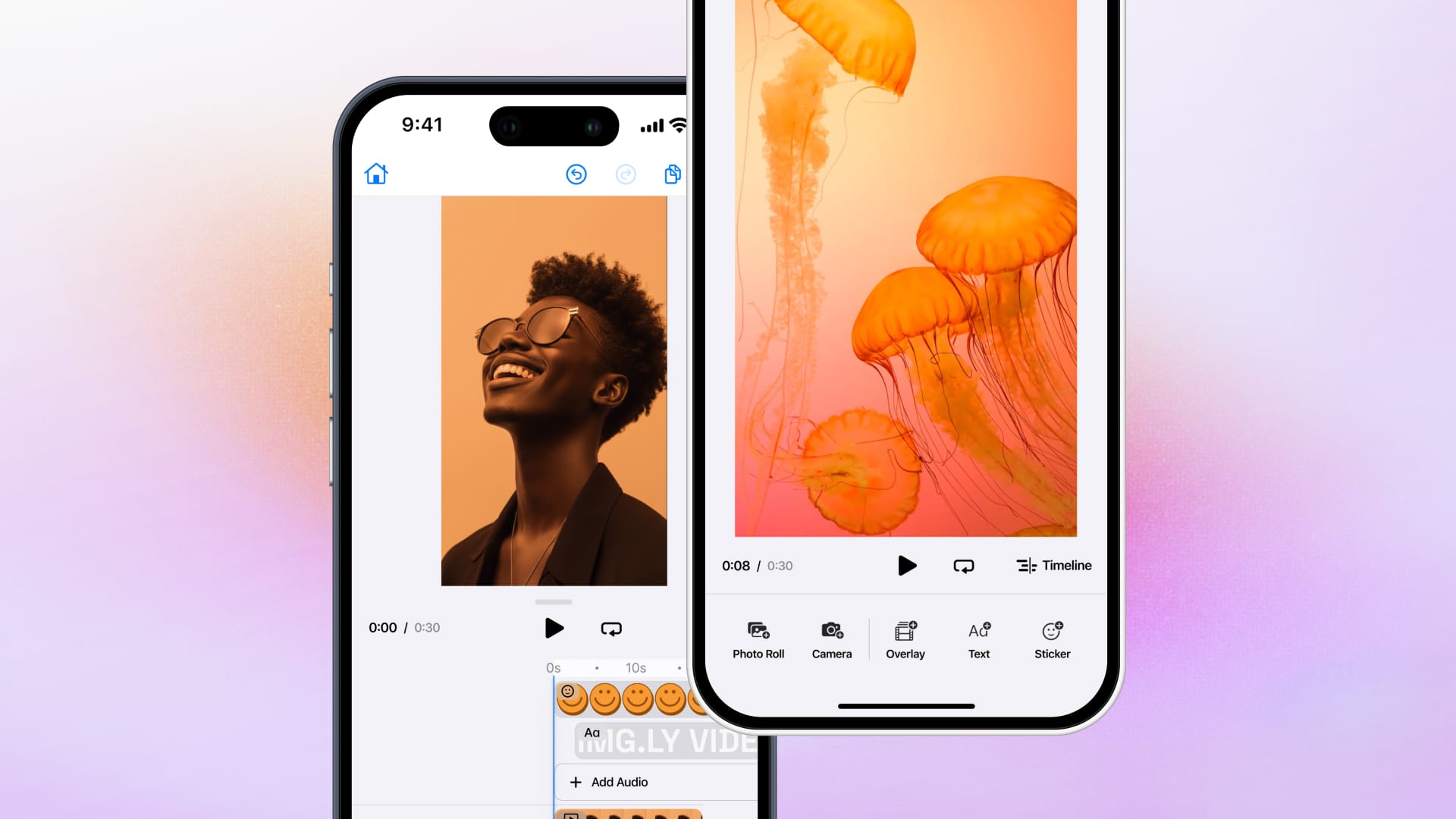

Another reason I chose this challenge was its compatibility with CE.SDK, our design and video editor library. CE.SDK offers a robust editing toolkit that integrates into any product with just a few lines of code. Its features, like headless mode, are ideal for automating workflows like video generation.

Most faceless video services use React-based video generation and achieve fair results. However, using CE.SDK instead of a React-based library could improve the overall experience in three critical ways:

- Editable Outputs: This is huge. Full automation often needs human adjustments for fine-tuning. CE.SDK enables automated video generation while allowing manual refinement of the results.

- Enhanced Visual Quality: CE.SDK has its own rendering pipeline, allowing for more nuanced visual effects and animations. When you’re competing against others in this space, it can make a huge difference if you’re able to produce higher fidelity in the visual output.

- Visual Design Workflow: Create design components or even entire templates visually, and then use them via code. This authoring workflow can be extremely helpful in creating rich, interesting designs for the generated videos.

The Ground Rules

To keep the challenge focused, I set strict rules:

- Time Limit: Spend no more than 12 hours on the challenge.

- No Manual Coding: Avoid writing any code yourself—everything should be built through conversations with AI.

- Trust the AI: Do not read or analyze code generated by the AI. Rely entirely on its decisions.

- Skip External Research: Do not read or explore the APIs you intend to use. Instead, provide links to the AI and let it determine how to use them.

- Compare AI Performance: Alternate Claude Sonnet 3.5 and ChatGPT o1 for code generation to evaluate which performs better.

The Tools & Workflow

Code Editor: Cursor

Built on VSCode's foundation, Cursor stood out as the only editor offering both an integrated chat interface and the ability to switch between different LLMs. However, with GitHub's recent significant updates to Copilot, I'll switch to VSCode with Copilot for future challenges.

UI Prototyping: Claude Artifacts

Rather than building the entire project in my code editor, I chose to prototype the UI directly through Claude's web interface. The benefits were immense:

- Instant results: When creating an artifact, Claude automatically writes and compiles the code, drawing on common UI libraries and components. This eliminates setup time and technical overhead, allowing me to focus purely on design iterations.

- Instant variations: Claude enables rapid prototyping through parallel conversations. When a design direction didn't quite work, I could simply start a fresh conversation with modified requirements and evaluate a new prototype. This approach helped me develop three viable concepts quickly, a pace that would have been impossible in a traditional code editor.

- Quality of execution: Claude transforms rough concepts into polished, intuitive interfaces. Its suggestions often surpassed my initial ideas, offering sophisticated solutions I hadn't considered.

- Keep it clean: By prototyping outside the code editor, I kept the main project's codebase clean and focused. This separation prevented the accumulation of experimental code and maintained the clarity of our primary development environment.

Quickly prototype your interface with Claude Artifacts.

APIs

Key APIs used in the project included:

- Script Generation: Claude Sonnet 3.5 vs various ChatGPT models.

- Image/Video Assets: Fal.ai Flux models.

- Speech Synthesis: ElevenLabs.

Building the App: Divide and Conquer

After prototyping the UI, I started chatting with the LLM inside the code editor so that it could code the app. To work with the AI efficiently, I followed a divide-and-conquer approach. Rather than simply asking it to “build me a video app,” I broke down the problem into manageable steps:

- Generate a video script: Create an AI prompt that includes user input and examples of the desired output format. Pass this prompt to the LLM API.

- Parse the script to generate assets (speech, images, text): Parse the LLM’s response to extract image prompts and speech paragraphs. Send these to their respective APIs.

- Compose the final video: Load all the generated assets into a predefined template to generate the finished video through the CE.SDK library.
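To make these steps more concrete, here is a minimal TypeScript sketch of the logic around them. It is an illustration, not the code from the project. The JSON script format, the `Scene` and `TimedScene` types, and the function names `buildScriptPrompt`, `parseScript`, and `buildTimeline` are all assumptions made for this example. The actual calls to the LLM, image, and speech APIs, as well as the CE.SDK composition itself, are intentionally left out.

```typescript
// Hypothetical shape of one scene in the generated script (an assumption,
// not the format used in the original project).
interface Scene {
  narration: string;   // paragraph that would be sent to the speech API
  imagePrompt: string; // prompt that would be sent to the image API
}

// Step 1: combine the user's topic with an example of the desired output
// so the LLM answers in a format that is easy to parse.
function buildScriptPrompt(topic: string, sceneCount: number): string {
  const example: Scene[] = [
    { narration: "One or two spoken sentences.", imagePrompt: "A visual description." },
  ];
  return [
    `Write a script for a short video about: ${topic}`,
    `Use exactly ${sceneCount} scenes.`,
    "Respond with a JSON array only, in this format:",
    JSON.stringify(example, null, 2),
  ].join("\n");
}

// Step 2: extract the scenes from the LLM's raw text response.
// Models often wrap JSON in extra prose, so we cut out the outermost
// [...] span before parsing and then validate every entry.
function parseScript(response: string): Scene[] {
  const start = response.indexOf("[");
  const end = response.lastIndexOf("]");
  if (start === -1 || end <= start) {
    throw new Error("No JSON array found in the LLM response");
  }
  const data: unknown = JSON.parse(response.slice(start, end + 1));
  if (!Array.isArray(data)) {
    throw new Error("Expected a JSON array of scenes");
  }
  return data.map((item: unknown, index: number): Scene => {
    const record = item as Record<string, unknown> | null;
    const narration = record?.narration;
    const imagePrompt = record?.imagePrompt;
    if (typeof narration !== "string" || typeof imagePrompt !== "string") {
      throw new Error(`Scene ${index} is missing narration or imagePrompt`);
    }
    return { narration, imagePrompt };
  });
}

// Step 3 (preparation only): once the duration of each narration clip is
// known, place the scenes back to back so each one gets a start time.
interface TimedScene extends Scene {
  start: number;    // seconds from the beginning of the video
  duration: number; // length of the narration clip in seconds
}

function buildTimeline(clips: Array<Scene & { duration: number }>): TimedScene[] {
  let cursor = 0;
  return clips.map((clip): TimedScene => {
    const timed: TimedScene = { ...clip, start: cursor };
    cursor += clip.duration;
    return timed;
  });
}
```

In an app like this one, the string returned by `buildScriptPrompt` would be sent to the LLM API, each `narration` and `imagePrompt` from `parseScript` would go to the speech and image services, and the timed scenes would then be loaded into the video template. Asking for strict JSON and validating it is one possible design choice; it makes a malformed response fail loudly instead of producing a broken video.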

After completing these steps, I was finally able to generate my first fully automated videos! With a few more tweaks and additions, I had an MVP ready within twelve hours.

The final result: A Short Video Generator

There are still some missing features, partly because I spent a significant amount of time refining the prompt to generate the video script. I also had to bend the rules occasionally—sometimes the LLM would hit a wall, and I had to read or write small snippets of code.

Key Takeaways

Engineering Knowledge Is Essential

You should have some engineering background to achieve the AI productivity boost in development.

- AI doesn’t solve everything for you. You are still the architect. You provide a lot of input and guidance. AI often needs to be pointed to the right strategy. Foundational knowledge of computer science is hugely advantageous for working with AI effectively.

- As mentioned, I had to read and write a few lines of code myself. Without coding experience, I probably would not have been able to progress, because the LLM could not get past those points on its own.

- The getting-started experience is nowhere close to novice-friendly. How do you get started with a new project in a code editor that actually requires you to do the setup manually? My workaround was to create an empty project and then ask the LLM for instructions on using a React boilerplate. Again, this is engineering knowledge; any novice would have hit a wall at this point.

Claude Outperformed ChatGPT

Claude was the clear winner in the side-by-side comparison, for three reasons:

- Claude Artifacts was a game changer for UI prototyping.

- It was generally better at writing and understanding code. This is difficult to quantify, but in some cases Claude fixed the mess ChatGPT left in the code.

- Claude can process URLs, which makes working with APIs much smoother.

Who would have thought new LLMs would catch up to OpenAI so quickly after they released the first version of ChatGPT?

Complexity Slows AI

The more code in my project, the slower the overall progress. LLMs struggled with the growing complexity. Their context windows filled more quickly, and their responses became increasingly unreliable. At some point, it becomes extremely difficult to make architectural changes, especially if this affects multiple parts of the app. When trying to fix errors, you’ll often find yourself in a whack-a-mole game. While the AI would resolve one issue, it would inadvertently introduce new problems elsewhere, creating an endless loop of fixes and regressions.

Ultimately, the time invested in this challenge was well worth it. While LLMs can’t build products end to end on their own, they can significantly streamline product development when paired with the right human collaboration. The real question is whether development teams are ready to adapt their habits and explore new workflows to boost productivity.

Next Steps

This challenge has inspired me to refine and expand on this project. Future iterations will focus on harnessing CE.SDK’s unique features to push the boundaries of automated video generation.

Stay tuned for part two of this series—there’s much more to explore!

UPDATE: Read part two, a cookbook on how to build your own short video creator!
