The explosion of video content across platforms, from social media and streaming services to e-learning and surveillance, has made manual video processing increasingly impractical. As organizations manage thousands of clips, automation has become critical for handling transcoding, resizing, thumbnail generation, and other repetitive tasks efficiently. Manual workflows quickly become bottlenecks, consuming engineering time and introducing inconsistencies that slow down production and distribution.

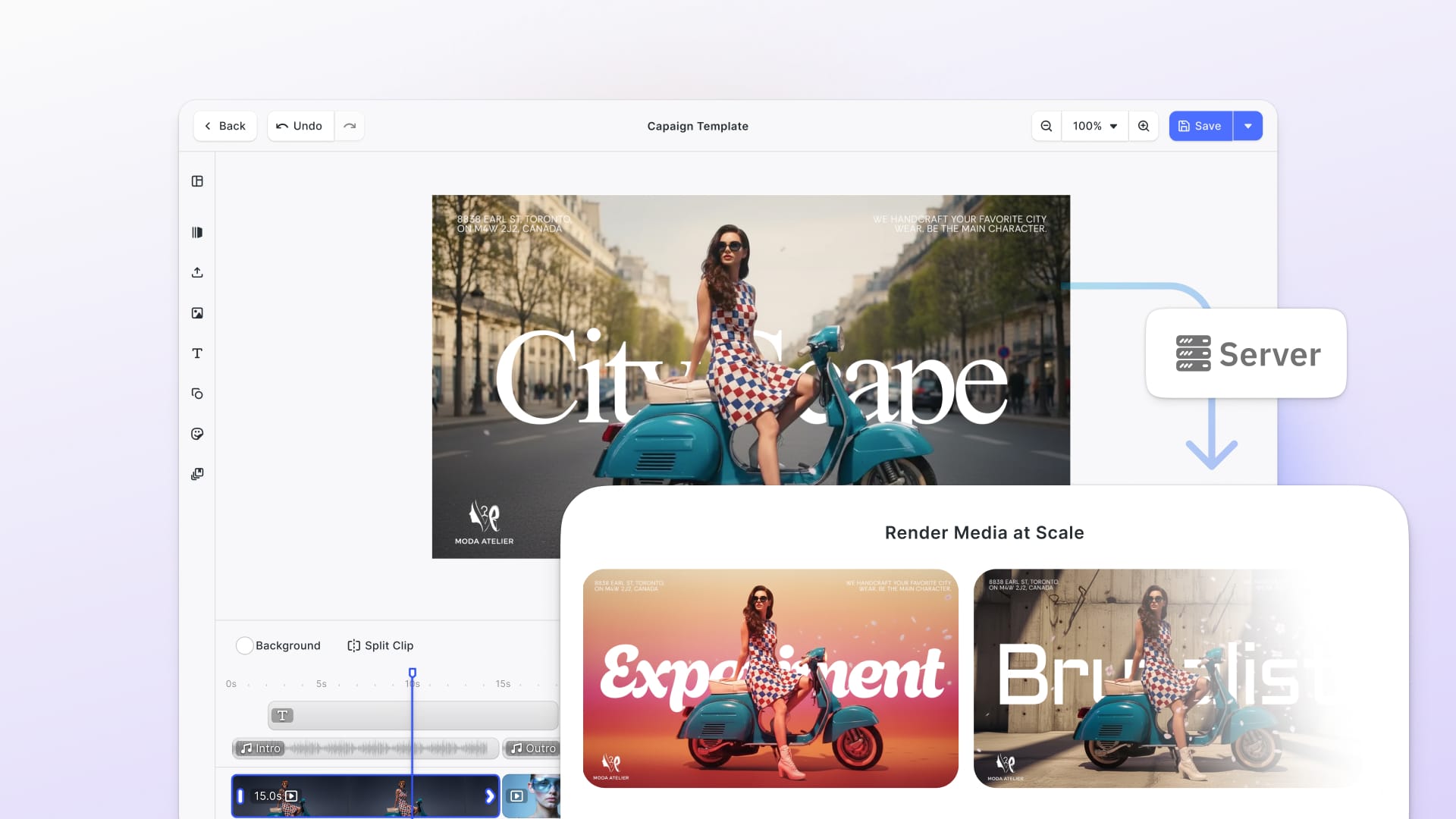

In this article, you’ll walk through a Docker-based batch video processing system powered by FFmpeg, complete with both a REST API and a command-line interface (CLI). This setup enables scalable, reproducible, and automated media operations in local or cloud environments. You can deploy this server on cloud providers; our guides for running FFmpeg on AWS Spot Instances and on GCP walk you through setup on each platform. By the end, you’ll understand how to design infrastructure that can process hundreds of videos reliably and in parallel. To follow along, you’ll need a basic understanding of Python, Docker, and common video formats such as MP4 and MOV. For a primer on running FFmpeg inside a Docker container, see our Docker guide.

You can clone this GitHub repository and follow along with the article to get a working Docker-based batch video processing system, with FFmpeg doing the heavy lifting.

Understanding Video Processing Fundamentals

FFmpeg is a powerful command-line toolkit capable of reading, transcoding, filtering, and muxing virtually any media format. It’s the foundation of countless commercial encoders, streaming platforms, and post-production tools. With a single command, you can convert between codecs, trim segments, extract audio, or even apply complex filter graphs. When these commands are chained together in scripts or APIs, they form the backbone of scalable media pipelines. For readers unfamiliar with FFmpeg, our Ultimate Guide to FFmpeg covers the basics and advanced techniques.

At its core, most video workflows revolve around a few recurring operations: transcoding (changing codecs or bitrates), compression (optimizing size for delivery), watermarking (branding or copyright protection), and thumbnail extraction (visual previews for cataloging). Each operation impacts performance differently depending on your hardware setup. CPU-based processing offers flexibility but can be slow for high-resolution workloads, while GPU acceleration through NVENC or VAAPI dramatically speeds up encoding at the cost of hardware dependencies. Managing memory and I/O efficiently is crucial, as reading and writing large video files can quickly become a bottleneck in batch pipelines.
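To make the CPU/GPU trade-off concrete, here is a minimal sketch of how a pipeline might switch between the software libx264 encoder and NVIDIA’s h264_nvenc encoder. The function name build_encode_cmd is hypothetical, and the GPU path assumes an FFmpeg build compiled with CUDA/NVENC support plus a compatible NVIDIA driver; VAAPI would need a different set of flags.

```python
from __future__ import annotations


def build_encode_cmd(src: str, dst: str, use_gpu: bool = False) -> list[str]:
    """Build an FFmpeg argv list for H.264 encoding on the CPU or an NVIDIA GPU."""
    cmd = ["ffmpeg", "-y"]
    if use_gpu:
        # Input option: ask FFmpeg to decode on the GPU as well
        cmd += ["-hwaccel", "cuda"]
    cmd += ["-i", src]
    if use_gpu:
        # NVENC hardware encoder: much faster, but needs NVIDIA hardware
        cmd += ["-c:v", "h264_nvenc", "-preset", "medium"]
    else:
        # Software x264 encoder: portable, slower on high-resolution input
        cmd += ["-c:v", "libx264", "-preset", "medium"]
    # Copy the audio stream unchanged (assumes the output container accepts it)
    cmd += ["-c:a", "copy", dst]
    return cmd
```

The returned list can be passed straight to subprocess.run(cmd, check=True), which avoids shell quoting issues with file names.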

Understanding the file format landscape and the quality–size trade-off is key to producing professional results. Modern codecs like H.264, H.265 (HEVC), and VP9 dominate for their balance of efficiency and compatibility, typically wrapped in container formats like MP4, MOV, or WebM. Tools like FFmpeg expose fine-grained control through parameters such as CRF (Constant Rate Factor), bitrate targeting, and encoding presets, allowing you to tune the ideal compromise between visual fidelity and storage or bandwidth requirements.
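The two main rate-control styles mentioned above map to different FFmpeg flags. The helper below is a hypothetical sketch for the x264/x265 encoders: CRF mode uses -crf, while bitrate targeting uses -b:v. (VP9’s libvpx-vp9 encoder behaves differently, for example needing -b:v 0 for pure constant-quality mode, and is not covered here.)

```python
from __future__ import annotations


def rate_control_args(codec: str = "libx264", crf: int | None = None,
                      bitrate_kbps: int | None = None,
                      preset: str = "medium") -> list[str]:
    """Return video-encoding args using either CRF or a target bitrate."""
    if (crf is None) == (bitrate_kbps is None):
        raise ValueError("specify exactly one of crf or bitrate_kbps")
    args = ["-c:v", codec, "-preset", preset]
    if crf is not None:
        # Constant quality: file size varies with content complexity
        args += ["-crf", str(crf)]
    else:
        # Average bitrate: predictable size, quality varies with content
        args += ["-b:v", f"{bitrate_kbps}k"]
    return args
```

CRF is usually the better default for archives and web delivery, while a bitrate target suits platforms with hard size or bandwidth limits.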

The FFmpeg Batch Video Processor builds on these fundamentals to demonstrate how FFmpeg can power fully automated, scalable media workflows. Packaged as a Docker-based, production-ready system, it automates complex operations such as transcoding, compression, watermarking, and thumbnail generation across multiple videos simultaneously. By combining a FastAPI-powered REST API with a command-line interface (CLI), it provides flexible control over batch processing, progress tracking, and workflow automation. With built-in profiles, concurrent workers, and robust logging, it turns FFmpeg’s raw capabilities into a modern, reproducible video processing pipeline suited for professional production, web delivery, and large-scale content management. For tutorials on cropping and trimming at the client level, see our Flutter guide.

Now let’s dig deeper into the project.

Architecture Deep Dive

System Components Overview

The system is modular by design, separating API logic, job management, and media processing into distinct layers for clarity and scalability. Let’s look at each layer of the project:

- FastAPI REST interface (api.py): This file defines the FastAPI application for the batch video processing API powered by FFmpeg. It provides endpoints for managing video processing jobs, profiles, workflows, and file uploads. Key components include:
  - Global Instances: The VideoProcessor executes FFmpeg-based tasks like transcoding and compression, while the JobQueue manages concurrent job execution through a worker pool. The ConfigManager maintains processing profiles and workflows, ensuring consistent parameters across all jobs.
  - Endpoints: The API provides endpoints for managing jobs, profiles, and workflows, as well as handling uploads and system monitoring. You can create, list, retrieve, cancel, and download jobs; access predefined profiles and workflows; upload videos for processing; check system health and queue statistics; and retrieve metadata for uploaded videos. When the Docker container is running, open http://localhost:8000/docs#/ to see a detailed description of every endpoint.
  - Startup/Shutdown Events: These initialize the job queue when the application starts and clean it up on shutdown.

  The API supports operations like transcoding, compressing, and adding watermarks, using profiles and workflows for predefined configurations. You can also use it to build a dashboard for monitoring and managing jobs.
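Because the API speaks plain HTTP and JSON, jobs can be submitted from any script. The standard-library sketch below only builds a job-creation request. The /jobs path and the payload shape are assumptions (the field names borrow the Job attributes input_file, operation, and parameters), so check the schema at /docs before relying on them.

```python
from __future__ import annotations

import json
import urllib.request

API_BASE = "http://localhost:8000"  # port published by the Docker Compose service


def build_create_job_request(input_file: str, operation: str,
                             parameters: dict | None = None) -> urllib.request.Request:
    """Build (but do not send) a POST request that submits one job."""
    # Hypothetical endpoint path and payload shape; verify against /docs
    payload = {"input_file": input_file, "operation": operation,
               "parameters": parameters or {}}
    return urllib.request.Request(
        f"{API_BASE}/jobs",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

Separating request construction from sending keeps the payload easy to inspect and test without a running container.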

- Job queue management (job_queue.py): This file implements the job queue system for managing and processing video processing tasks asynchronously. It includes:
  - Job Class: The class represents a video processing task with attributes such as id, input_file, output_file, operation, parameters, status, progress, timestamps, and error details. It supports operations like thumbnail generation, GIF creation, and audio extraction, automatically generating output file paths. A to_dict method is included for easy serialization of task data.
  - JobQueue Class: The class manages a queue of video processing jobs using a pool of asynchronous workers. It allows adding, retrieving, listing, and canceling jobs, filtering by status, and starting or stopping workers as needed. It also tracks key statistics such as total, completed, and failed jobs, and can persist the queue state to a file. Workers process jobs asynchronously, updating progress and handling errors throughout execution.
  - Worker System: This component processes jobs using a processor object that defines the specific operations to perform. It runs these operations in a thread pool to avoid blocking the event loop, while continuously updating job progress and handling both successful completions and failures.

  Together, these components make batch video processing possible, supporting asynchronous execution and state persistence.
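A job record like the one described above can be sketched as a dataclass. This is an illustrative reconstruction, not the project’s code: the JobStatus values, the OUTPUT_EXT mapping, the /data/output directory, and the <stem>_<operation><ext> naming scheme are all assumptions.

```python
from __future__ import annotations

import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from enum import Enum
from pathlib import PurePosixPath


class JobStatus(str, Enum):
    PENDING = "pending"
    RUNNING = "running"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELLED = "cancelled"


# Hypothetical mapping from operation to output file extension
OUTPUT_EXT = {"generate_thumbnail": ".jpg", "create_gif": ".gif", "extract_audio": ".mp3"}


@dataclass
class Job:
    input_file: str
    operation: str
    parameters: dict = field(default_factory=dict)
    output_file: str | None = None
    id: str = field(default_factory=lambda: uuid.uuid4().hex)
    status: JobStatus = JobStatus.PENDING
    progress: float = 0.0
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    error: str | None = None

    def __post_init__(self) -> None:
        if self.output_file is None:
            src = PurePosixPath(self.input_file)
            # Non-video operations get their own extension; others keep the input's
            ext = OUTPUT_EXT.get(self.operation, src.suffix)
            self.output_file = str(PurePosixPath("/data/output") / f"{src.stem}_{self.operation}{ext}")

    def to_dict(self) -> dict:
        """Serialize to JSON-friendly types (enum value, ISO timestamp)."""
        d = asdict(self)
        d["status"] = self.status.value
        d["created_at"] = self.created_at.isoformat()
        return d
```

For example, Job("/data/input/clip.mp4", "create_gif") would get the output path /data/output/clip_create_gif.gif.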

- Video processing engine (video_processor.py): This file defines a VideoProcessor class that provides a set of methods for video processing using FFmpeg. Key features include:
  - Video Metadata Extraction:
    - get_video_info: Extracts video details like duration, size, resolution, codec, and frame rate.
  - Video Operations:
    - transcode: Converts videos to different formats/codecs.
    - compress: Reduces video size with optional scaling or a target size.
    - add_watermark: Adds a watermark to a video at specified positions.
    - generate_thumbnail: Creates a thumbnail image from a video.
    - extract_audio: Extracts audio from a video in various formats.
    - create_gif: Converts a video segment into a GIF.
    - concatenate_videos: Merges multiple videos into one.
    - trim_video: Trims a video to a specific duration or time range.
  - FFmpeg Execution:
    - _execute_ffmpeg: Handles FFmpeg command execution with progress tracking and error handling.

  The class uses subprocesses to interact with FFmpeg and supports progress callbacks for real-time updates.

- Configuration system (config_manager.py): This file defines a ConfigManager class that manages video processing profiles and workflows stored in a YAML configuration file. Key functionalities include:
  - Configuration Management: Loads profiles and workflows from a YAML file (config/profiles.yaml) and saves any updates or new definitions back to the same file, keeping configurations persistent and easily editable.
  - Profiles: Retrieves specific profiles by name and lists all available profiles with their details. It validates each profile to ensure required fields like operation and parameters are defined, and supports creating custom profiles dynamically for flexible, reusable processing configurations.
  - Workflows: Retrieves a specific workflow by name and lists all available workflows along with their descriptions and the number of jobs they contain, providing a clear overview of predefined processing pipelines.
  - Error Handling and Logging: Logs warnings for missing profiles or workflows and errors during file operations.

  This class is essential for managing reusable configurations for video processing tasks.
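The lookup-and-validate behavior described for profiles can be sketched in a few lines. This assumes the YAML has already been parsed into a dict (for example with PyYAML’s yaml.safe_load) and that profiles live under a top-level "profiles" key; both are assumptions, as are the function names.

```python
from __future__ import annotations

import logging

logger = logging.getLogger("config_manager_sketch")
REQUIRED_FIELDS = ("operation", "parameters")


def validate_profile(name: str, profile: dict) -> None:
    """Raise ValueError if a profile lacks required fields or has bad types."""
    missing = [f for f in REQUIRED_FIELDS if f not in profile]
    if missing:
        raise ValueError(f"profile {name!r} is missing {', '.join(missing)}")
    if not isinstance(profile["parameters"], dict):
        raise ValueError(f"profile {name!r}: 'parameters' must be a mapping")


def get_profile(config: dict, name: str) -> dict | None:
    """Look up a profile in the parsed YAML; log a warning if it is absent."""
    profile = config.get("profiles", {}).get(name)
    if profile is None:
        logger.warning("Profile not found: %s", name)
        return None
    validate_profile(name, profile)
    return profile
```

Validating at lookup time means a malformed profile fails fast with a clear message instead of producing a confusing FFmpeg error later in a worker.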

Design Patterns

The FFmpeg-batch system applies several proven software design patterns to ensure maintainability and scalability. It follows a producer-consumer model through its job queue: the FastAPI layer acts as the producer by submitting jobs, while asynchronous workers act as consumers, executing FFmpeg tasks concurrently. The system also supports profile-based processing templates, where predefined configurations (such as codec settings, bitrates, and resolutions) allow teams to standardize repetitive tasks. On top of that, workflow orchestration ties multiple operations together, enabling complex pipelines (for example, transcoding followed by thumbnail extraction) to run automatically in sequence.
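The producer-consumer pattern can be illustrated with asyncio.Queue and asyncio.to_thread, which matches the article’s description of running blocking work in a thread pool so the event loop stays responsive. This is a simplified sketch, not the project’s JobQueue: jobs are plain path strings, results go into a dict, and process stands in for a blocking FFmpeg call.

```python
from __future__ import annotations

import asyncio
from typing import Callable


async def worker(queue: asyncio.Queue, process: Callable[[str], str],
                 results: dict[str, str]) -> None:
    """Consumer: take jobs off the queue and run each one in a worker thread."""
    while True:
        job = await queue.get()
        try:
            # asyncio.to_thread keeps the blocking call off the event loop
            results[job] = await asyncio.to_thread(process, job)
        except Exception as exc:  # record the failure; the worker keeps running
            results[job] = f"failed: {exc}"
        finally:
            queue.task_done()


async def run_batch(jobs: list[str], process: Callable[[str], str],
                    max_workers: int = 2) -> dict[str, str]:
    """Producer: enqueue all jobs, wait for completion, then stop the workers."""
    queue: asyncio.Queue = asyncio.Queue()
    results: dict[str, str] = {}
    workers = [asyncio.create_task(worker(queue, process, results))
               for _ in range(max_workers)]
    for job in jobs:
        queue.put_nowait(job)
    await queue.join()  # returns once task_done() was called for every job
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Catching exceptions inside the worker matters: without it, one failed encode would kill that worker task and shrink the pool for the rest of the batch.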

Scalability Considerations

From a scalability perspective, the project uses worker pools to parallelize workloads, so multiple videos can be processed simultaneously without overloading the system. Resource management, including queue throttling, memory optimization, and controlled CPU utilization, keeps performance consistent under heavy load. This modular design makes it straightforward to scale horizontally, whether by deploying additional Docker containers, scaling Kubernetes pods, or integrating cloud-based distributed processing.
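One simple form of queue throttling is to bound the queue, so that once it is full new jobs are rejected instead of piling up in memory. The sketch below assumes that approach; the article does not say how the project throttles, and returning an HTTP 503 to the caller on rejection is a hypothetical choice.

```python
from __future__ import annotations

import asyncio


def try_enqueue(queue: asyncio.Queue, job: str) -> bool:
    """Add a job if there is room; return False instead of blocking when full."""
    try:
        queue.put_nowait(job)
        return True
    except asyncio.QueueFull:
        # Caller could reject the request (e.g. HTTP 503) rather than buffer it
        return False


async def demo() -> tuple[list[bool], int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=2)  # at most 2 waiting jobs
    accepted = [try_enqueue(queue, f"clip{i}.mp4") for i in range(4)]
    return accepted, queue.qsize()


if __name__ == "__main__":
    print(asyncio.run(demo()))  # ([True, True, False, False], 2)
```

Rejecting early gives clients a clear signal to retry later, which is easier to reason about than an unbounded backlog that eventually exhausts memory.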

Setting Up the Development Environment

Before building or running the FFmpeg-batch system, it’s essential to configure a proper development environment that mirrors production conditions. The setup ensures smooth testing, consistent builds, and predictable behavior across machines.

Prerequisites Installation

You’ll need to have Docker, Python 3.11, and FFmpeg installed. Docker handles containerization and makes it easy to run the application and its dependencies consistently. Python 3.11 provides the runtime for FastAPI and background processing components, while FFmpeg performs the actual video operations. Once installed, you can verify your setup using commands like docker --version, python3 --version, and ffmpeg -version.
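If you prefer a scripted check to running the version commands by hand, a small helper can report what is available. The function names are hypothetical; the check only looks for the executables on PATH and compares the running interpreter’s version.

```python
from __future__ import annotations

import shutil
import subprocess
import sys


def check_prerequisites() -> dict[str, bool]:
    """Report whether the required tools are available on this machine."""
    return {
        "python>=3.11": sys.version_info >= (3, 11),
        "docker": shutil.which("docker") is not None,
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


def ffmpeg_version() -> str | None:
    """Return the first line of `ffmpeg -version`, or None if FFmpeg is missing."""
    if shutil.which("ffmpeg") is None:
        return None
    out = subprocess.run(["ffmpeg", "-version"], capture_output=True, text=True)
    return out.stdout.splitlines()[0] if out.stdout else None


if __name__ == "__main__":
    for tool, ok in check_prerequisites().items():
        print(f"{tool:<14} {'OK' if ok else 'MISSING'}")
```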

Project Structure Walkthrough

The project is organized into clear modules for scalability and maintainability. Core components include api.py for the REST interface, job_queue.py for task management, video_processor.py for media operations, and config_manager.py for loading and saving profiles and workflows. Additional directories like data/input/ and data/output/ are used for managing uploaded and processed files.

Configuration Setup

The system uses environment variables (.env) to manage runtime settings, such as the maximum number of workers or file storage paths, and a profiles.yaml file to define reusable encoding profiles and workflows. By editing these configurations, you can customize how the system behaves, whether you’re running quick local tests or deploying to a cloud environment.
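Reading these settings in Python typically means parsing environment variables with fallbacks. In the sketch below, MAX_WORKERS, API_HOST, and API_PORT are the variable names used in the Docker Compose file; the INPUT_DIR and OUTPUT_DIR names and every default value are assumptions. The values may come from a .env file or the Compose environment block; this code only reads whatever ends up in the process environment.

```python
from __future__ import annotations

import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    max_workers: int
    api_host: str
    api_port: int
    input_dir: str
    output_dir: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read runtime settings from environment variables with fallback defaults."""
    # Defaults and the INPUT_DIR/OUTPUT_DIR names are illustrative assumptions
    return Settings(
        max_workers=int(env.get("MAX_WORKERS", os.cpu_count() or 2)),
        api_host=env.get("API_HOST", "0.0.0.0"),
        api_port=int(env.get("API_PORT", "8000")),
        input_dir=env.get("INPUT_DIR", "/data/input"),
        output_dir=env.get("OUTPUT_DIR", "/data/output"),
    )
```

Accepting the environment as a parameter makes the loader easy to test with a plain dict instead of mutating os.environ.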

Docker Compose Deep Dive

The Docker Compose configuration defines a single service, video-processor, which builds from the local Dockerfile and runs the FFmpeg batch processing API. It maps port 8000 in the container to port 8000 on the host, so you can reach the FastAPI interface from outside the container. Several volumes are mounted, including ./data/input, ./data/output, and ./data/logs, to persist uploaded files, processed results, and runtime logs beyond the container lifecycle. The ./config directory is also mounted to provide access to the YAML configuration files for profiles and workflows. Environment variables such as MAX_WORKERS, API_HOST, and API_PORT define runtime parameters, while PYTHONUNBUFFERED=1 ensures logs are written immediately instead of being buffered. Finally, the restart: unless-stopped policy tells Docker to restart the service if it crashes or when the Docker daemon restarts (for example, after a reboot), unless you stopped it explicitly. This makes the setup robust for both local development and long-running batch processing tasks.

Development vs. Production Setup

In development mode, you can use live reload for FastAPI and local volumes for quick iteration and debugging. In production mode, Docker Compose can be extended to include load-balanced worker containers, persistent storage, and monitoring tools. This enables horizontal scaling and high availability while keeping the same base configuration used during development, ensuring consistent results from your laptop to the cloud.

Configuration Management & Profiles

A core strength of the FFmpeg-batch system lies in its flexible configuration layer, which defines reusable processing templates and multi-step workflows through a YAML-based profile system. This allows developers and media teams to standardize encoding, compression, and transformation parameters while maintaining full control over quality, size, and performance trade-offs.

Profile System (config/profiles.yaml)

Profiles define how a video should be processed, specifying the operation type (e.g., transcode, compress, extract_audio) and its parameter set (e.g., codec, preset, CRF, or scaling). Each profile acts as a self-contained template that can be reused across multiple workflows or customized for specific business needs.

Common profiles include:

- web_optimized: transcodes videos to H.264 with a medium preset and CRF 23 for web streaming.
- social_media: compresses videos to 720p targeting 50 MB, ideal for platforms like Instagram or TikTok.
- mobile_optimized: scales videos down to 480p and limits file size for efficient mobile delivery.
- audio_mp3 and audio_aac: extract audio tracks at defined bitrates and formats for podcasts or companion files.
- thumbnail and preview_gif: generate visual previews or short animated snippets to enhance content catalogs.
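A size target such as social_media’s 50 MB has to be turned into a bitrate before FFmpeg can use it. The standard arithmetic is bitrate = size × 8 / duration. The hypothetical helper below applies it, treating 1 MB as 10^6 bytes, reserving an assumed 128 kbit/s for audio, and ignoring container overhead, so real files land slightly off target. The article does not show how the project’s compress operation computes this.

```python
def target_video_bitrate_kbps(target_mb: float, duration_s: float,
                              audio_kbps: int = 128) -> int:
    """Video bitrate (kbit/s) that makes a file roughly target_mb (10**6 bytes)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    # 1 MB = 8,000 kilobits; spread the budget over the clip's duration
    total_kbps = target_mb * 8000 / duration_s
    # Leave room for the audio track
    video_kbps = int(total_kbps) - audio_kbps
    if video_kbps <= 0:
        raise ValueError("target size too small for this duration")
    return video_kbps
```

For a 100-second clip, target_video_bitrate_kbps(50, 100) returns 3872 (4000 kbit/s total minus 128 for audio), which could be passed to FFmpeg as -b:v 3872k. Two-pass encoding usually hits a size target more precisely than a single pass.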

Creating custom profiles is straightforward: teams can define their own processing templates to meet business-specific requirements such as watermarking, caption embedding, or broadcast-compliant exports. The YAML format also supports profile inheritance, enabling developers to extend base configurations (like web_optimized) with minor overrides, ensuring consistency while reducing redundancy.
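The article does not show how inheritance is written in profiles.yaml. Plain YAML offers anchors and merge keys (<<: *base), which PyYAML’s safe_load understands, but merge keys are shallow: a child’s parameters mapping replaces the base’s entire mapping. The sketch below instead resolves a hypothetical extends key in Python and merges parameters one level deep.

```python
from __future__ import annotations


def resolve_profile(profiles: dict, name: str,
                    _seen: frozenset = frozenset()) -> dict:
    """Resolve a profile that may 'extend' a base profile (hypothetical key)."""
    if name in _seen:
        raise ValueError(f"inheritance cycle at {name!r}")
    profile = profiles[name]
    base_name = profile.get("extends")
    if base_name is None:
        return {"operation": profile["operation"],
                "parameters": dict(profile.get("parameters", {}))}
    base = resolve_profile(profiles, base_name, _seen | {name})
    return {
        # The child may override the operation; otherwise it is inherited
        "operation": profile.get("operation", base["operation"]),
        # Child parameters win over base parameters, key by key
        "parameters": {**base["parameters"], **profile.get("parameters", {})},
    }
```

With this approach, a web_hq profile could declare only extends: web_optimized and crf: 18, inheriting the codec and preset from its base.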

Workflow Orchestration

Beyond individual operations, the system supports multi-step workflows that chain multiple profiles into a single automated pipeline. For example, the social_media_package workflow combines compression, thumbnail generation, and GIF preview creation, all triggered as one batch job. Similarly, the archive_package workflow transcodes video at high quality, extracts MP3 audio, and generates a thumbnail for long-term storage. You can also define your own workflows from custom profiles that fit your use cases and use them in your applications.
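Expanding a workflow into concrete jobs can be as simple as looking up each profile in order. This sketch assumes a workflow is a mapping whose "jobs" entry is an ordered list of profile names; the real profiles.yaml schema may differ, and the function name is hypothetical.

```python
from __future__ import annotations


def expand_workflow(workflow: dict, profiles: dict, input_file: str) -> list[dict]:
    """Turn a workflow (ordered profile names) into job specs for one input."""
    jobs = []
    for step, profile_name in enumerate(workflow["jobs"], start=1):
        profile = profiles[profile_name]  # KeyError signals an unknown profile
        jobs.append({
            "step": step,
            "input_file": input_file,
            "operation": profile["operation"],
            # Copy so per-job tweaks never mutate the shared profile
            "parameters": dict(profile["parameters"]),
        })
    return jobs
```

Each resulting spec could then be submitted to the job queue, either one after another or concurrently when steps do not depend on each other.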

Workflows also enable parallel execution, allowing independent operations (such as audio extraction and GIF creation) to run simultaneously for faster turnaround. More advanced pipelines can incorporate conditional logic, such as adapting encoding settings based on input resolution or target platform requirements. Additionally, batch operations can be applied to entire directories, processing hundreds of videos in a consistent, repeatable manner.
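Conditional logic based on input resolution can be as small as refusing to upscale. The sketch below picks an FFmpeg scale filter from the source height and a per-platform cap; the PLATFORM_MAX_HEIGHT values and function names are hypothetical. In the scale filter, a width of -2 tells FFmpeg to preserve the aspect ratio while keeping the width divisible by 2, which H.264 encoders generally require.

```python
from __future__ import annotations

# Hypothetical per-platform height caps
PLATFORM_MAX_HEIGHT = {"mobile": 480, "social": 720, "web": 1080}


def scale_filter(input_height: int, max_height: int) -> str | None:
    """Return a scale filter that downsizes to max_height, never upscaling."""
    if input_height <= max_height:
        return None  # already small enough: keep the original resolution
    return f"scale=-2:{max_height}"


def adapt_args(input_height: int, platform: str) -> list[str]:
    """Build the -vf arguments for a platform, or nothing if no scaling is needed."""
    vf = scale_filter(input_height, PLATFORM_MAX_HEIGHT[platform])
    return ["-vf", vf] if vf else []
```

The input height would come from the metadata step (for example, ffprobe’s reported stream height) before the job is queued.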

Together, the profile and workflow system transforms FFmpeg-batch from a simple video processor into a scalable, declarative media automation framework, one that adapts to varied production environments and evolving content demands.

Command Line Interface

The Command Line Interface (CLI) in the FFmpeg-batch system offers a fast, intuitive way to trigger and monitor batch processing jobs directly from the terminal. Built on top of the FastAPI backend, the CLI (cli.py) simplifies automation for developers, video engineers, and content teams who prefer command-line tools or need to integrate FFmpeg-batch into larger production pipelines.

CLI Design (cli.py)

The CLI is designed around clear, operation-based command grouping. Users can run commands like process, upload, list, or download to manage the entire lifecycle of a batch job, from input submission to result retrieval. Each command maps closely to the API endpoints, ensuring consistency between programmatic and manual use.

In interactive mode, the CLI provides progress bars and real-time feedback for active jobs, displaying percentage completion, estimated time remaining, and current processing status. This helps operators monitor workloads without constantly querying the API.
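The article does not say how the CLI computes percentage and remaining time. One common approach is to run FFmpeg with -progress pipe:1 -nostats, read the key=value lines it writes to stdout, and compare out_time against the input duration. The sketch below assumes that approach and estimates the ETA by linear extrapolation; the function names are hypothetical.

```python
from __future__ import annotations

import time
from typing import Callable, Iterable, Iterator


def parse_out_time(value: str) -> float | None:
    """Convert FFmpeg's out_time value (HH:MM:SS.ffffff) to seconds."""
    try:
        h, m, s = value.split(":")
        return int(h) * 3600 + int(m) * 60 + float(s)
    except ValueError:  # e.g. "N/A" before the first frame is written
        return None


def progress_updates(lines: Iterable[str], duration_s: float, started: float,
                     clock: Callable[[], float] = time.monotonic
                     ) -> Iterator[tuple[float, float]]:
    """Yield (percent, eta_seconds) for every out_time line FFmpeg reports."""
    for line in lines:
        key, _, value = line.strip().partition("=")
        if key != "out_time":
            continue
        done = parse_out_time(value)
        if done is None or done <= 0 or duration_s <= 0:
            continue
        percent = min(100.0, 100.0 * done / duration_s)
        elapsed = clock() - started
        # Assume the rest of the media encodes at the speed observed so far
        eta = max(0.0, elapsed * (duration_s - done) / done)
        yield round(percent, 1), eta
```

In practice the lines would be read from the stdout of an FFmpeg subprocess, started is time.monotonic() captured at launch, and duration_s comes from probing the input.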

The CLI also supports batch operations, allowing users to process entire directories with a single command (e.g., cli.py process-folder ./input_videos --profile web_optimized). It automatically applies the chosen profile to all files and stores results in the designated output directory. To run the CLI inside the Docker container, use docker-compose exec, as in this example that applies a single profile:

```bash
# Using a profile
docker-compose exec video-processor python cli.py profile /data/input/video.mp4 web_optimized --output /data/output/video.mp4
```
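The article does not show how folder processing is implemented. A natural first step is planning: listing the video files in a directory and pairing each with an output path and profile. The sketch below assumes that; the VIDEO_EXTS set and plan_folder name are hypothetical, and output names simply reuse the input file name.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical set of extensions treated as video inputs
VIDEO_EXTS = {".mp4", ".mov", ".mkv", ".webm"}


def plan_folder(input_dir: str, output_dir: str, profile: str) -> list[dict]:
    """List video files in input_dir and pair each with an output path."""
    out = Path(output_dir)
    plan = []
    # Sorted for a deterministic, repeatable processing order
    for src in sorted(Path(input_dir).iterdir()):
        if src.is_file() and src.suffix.lower() in VIDEO_EXTS:
            plan.append({"input": str(src), "output": str(out / src.name),
                         "profile": profile})
    return plan
```

Separating planning from execution makes a dry run easy: print the plan first, then submit each entry as a job once it looks right.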

Finally, the CLI fits naturally into shell scripts and automation workflows, such as CI/CD pipelines, cron jobs, or custom scripts that trigger video processing as part of larger media workflows. You can also create jobs with custom parameters; the following command transcodes a video with libx264 using the slow preset and CRF 18:

```bash
# Transcode with custom parameters
python cli.py create /data/input/video.mp4 transcode \
  --params '{"codec":"libx264","preset":"slow","crf":18}'
```
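When the CLI is driven from another program, building the argument list with json.dumps guarantees the --params value is valid JSON and sidesteps shell quoting entirely. The helper below is a hypothetical sketch that reproduces the command above, optionally wrapped in docker-compose exec as in the profile example.

```python
from __future__ import annotations

import json
import shlex


def cli_create_cmd(input_file: str, operation: str, params: dict,
                   in_container: bool = True) -> list[str]:
    """Build the `cli.py create` argv, serializing params as compact JSON."""
    cmd = ["python", "cli.py", "create", input_file, operation,
           "--params", json.dumps(params, separators=(",", ":"))]
    if in_container:
        # Run inside the Compose service, as in the profile example
        cmd = ["docker-compose", "exec", "video-processor"] + cmd
    return cmd


if __name__ == "__main__":
    cmd = cli_create_cmd("/data/input/video.mp4", "transcode",
                         {"codec": "libx264", "preset": "slow", "crf": 18})
    # shlex.join renders a copy-pasteable shell line for logs
    print(shlex.join(cmd))
```

Pass the list directly to subprocess.run(cmd, check=True) rather than joining it into a string. In non-interactive environments such as CI, docker-compose exec typically also needs -T to disable pseudo-TTY allocation.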

Conclusion

The project demonstrates how even complex, large-scale video operations can be automated using open-source tools like FFmpeg, FastAPI, and Docker. By abstracting individual processing tasks into profiles and workflows, it enables reproducible, scalable, and platform-agnostic video processing pipelines, from content creators automating their daily exports to enterprises handling thousands of assets.

Whether you deploy it locally or in the cloud, this architecture can serve as the foundation for more advanced systems like adding GPU acceleration, distributed job queues, or integration with cloud storage. The full source code is available on GitHub, so you can clone, modify, and extend it for your specific production needs.