Last November, we released Portrait, an iOS app that helps create amazing, stylized selfies and portraits instantly.

With over a million downloads and many more portrait images created, we feel that the idea and vision behind Portrait have been more than confirmed. The central component of Portrait is an AI that is trained to clip portraits from the background, a technique we are eager to improve and refine further. In fact, Portrait helped us explore a novel technique for image editing, as we were able to leverage a powerful new kind of data in photography: depth data.

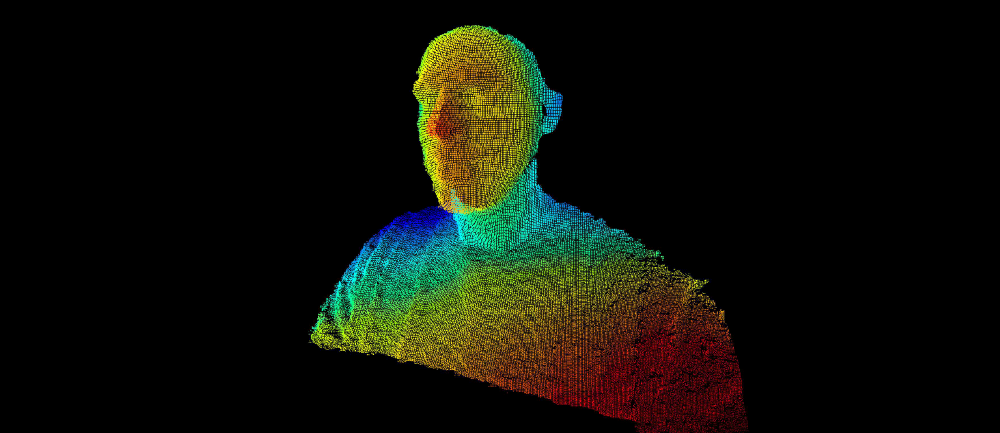

We began feeding our AI models with depth data from the iPhone X’s TrueDepth camera and had one goal in mind: to infer depth information for portrait imagery, in other words to bring three-dimensionality into a two-dimensional photo. Along the way, we created a new architecture concept that allows performance and memory improvements by modularizing and reusing neural networks.

In this article, we’d like to present some of our results along with the insights we gained.

The New Cool: Depth Data

Depth data first became available for image editing with the iPhone 7 Plus, when Apple introduced ‘Portrait Mode’. By combining a depth map with face detection, the device is able to blur out distant objects and backgrounds, mimicking the ‘bokeh’ or depth of field effect that is well known from DSLR cameras.

While the actual implementation varies, all major manufacturers nowadays offer a similar mode by incorporating depth data into their image editing pipeline. This is achieved through the conventional dual or even triple camera on the back of a phone, through dual-pixel offset calculations combined with machine learning, or through dedicated sensors like Apple’s TrueDepth module. In fact, for a modern flagship phone, some sort of depth-based portrait mode is almost a commodity.

From a developer’s perspective, things look a little different: depth data became a first-class citizen throughout the iOS APIs in iOS 11, and such data is now easily accessible on supported devices. Android users obviously have access to depth data as well, either through multiple cameras or through Google’s dual-pixel-based machine learning approach, seen in the newer Pixel 2 phones. But contrary to iOS, Android doesn’t yet offer a common developer interface to access such data. In fact, developers aren’t able to access any of the depth information Google or other manufacturers collect within their camera apps. This means developers would either need to implement the algorithm to infer depth from two images themselves or try to rebuild Google’s sophisticated machine-learning-powered system. Neither of these options is practical, and they are probably not even possible given the usual limitations of camera APIs.

So although it is quite common, depth data isn’t as easily accessible for developers as one might think. Right now you’re out of luck on Android, dependent on hardware on iOS and even then limited to the $1,000 flagship if you’re interested in depth for images taken with the front camera. And last but not least, across all devices and platforms, there is no way for you to generate a depth map for an existing image.

Deep Possibilities

Despite the restrictions, we decided to first explore the power of depth for image editing, as depth data provides many new exciting creative possibilities:

If we have a depth map for a given image, our editing possibilities are increased dramatically. Instead of a 2D image, a flat plane of color values, we suddenly have a depth value for each individual pixel, which translates into a 3D landscape highlighting distinct objects in the foreground and a clear indication of background.

Depth-aware Editing

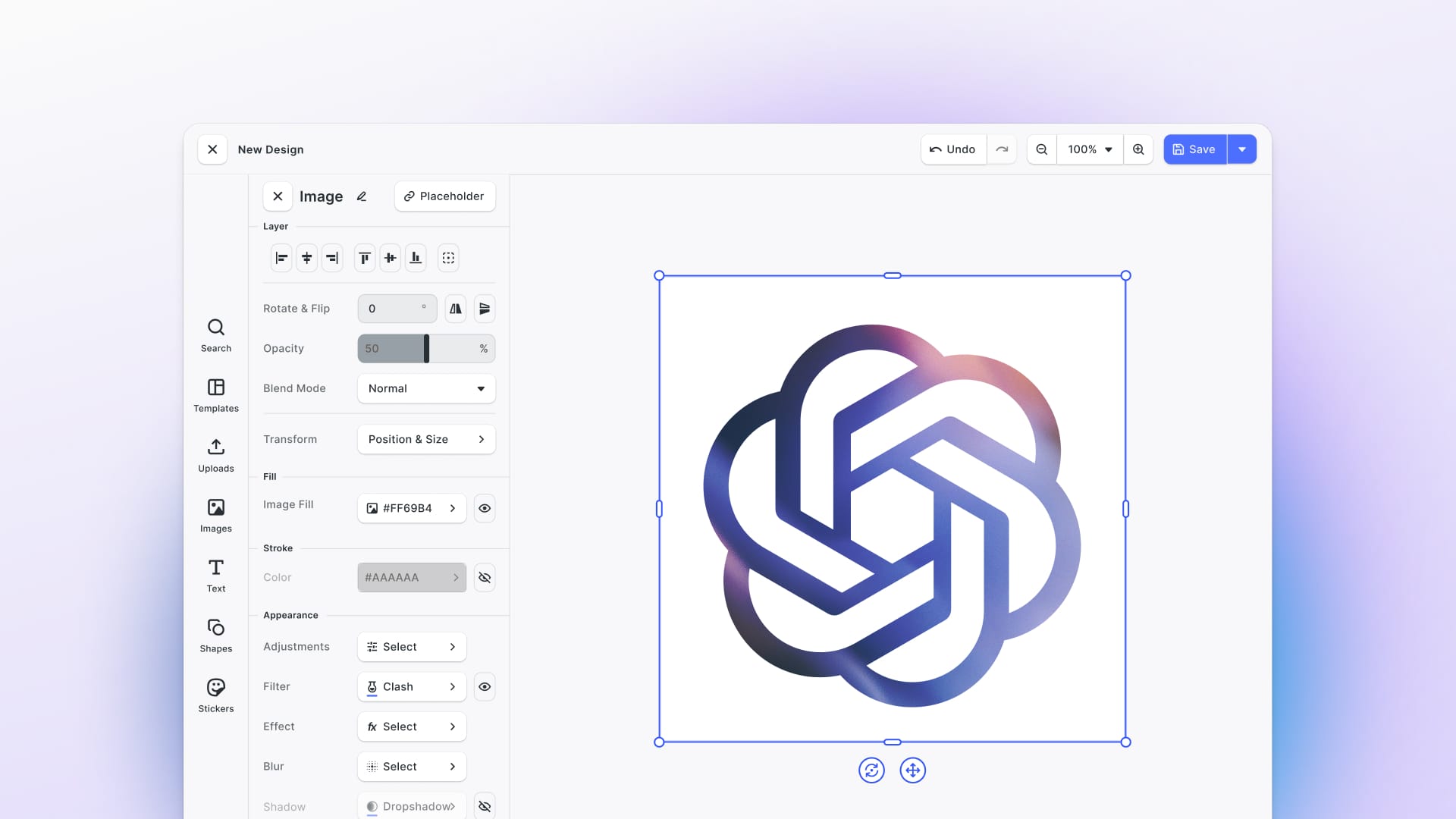

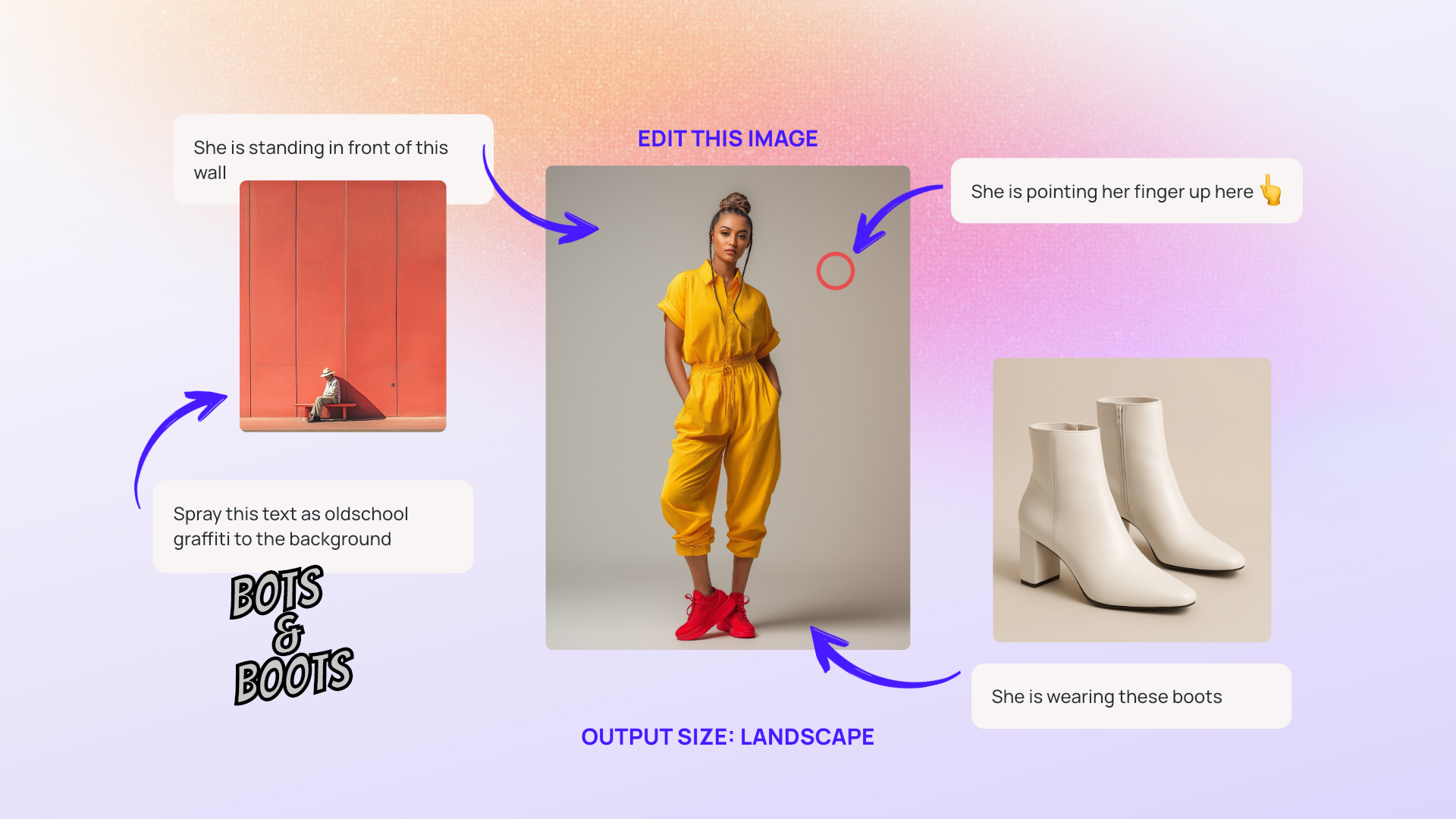

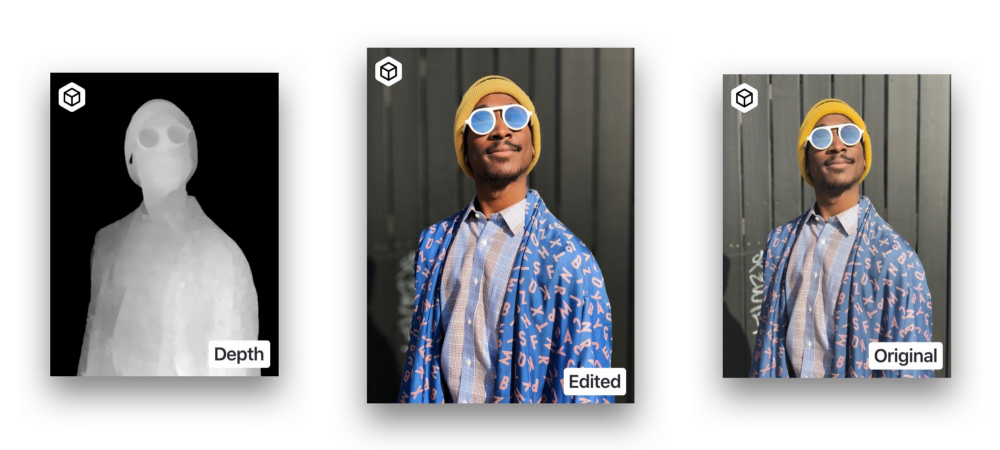

Instead of relying on color and texture differences to determine foreground and background, one could literally edit these regions individually. This allows adjustments like darkening the background while lightening the foreground, which makes portraits ‘pop’. If we were able to generate a high-resolution depth map, we could easily replace the AI currently used in Portrait and enable even more sophisticated creatives. Thanks to the new APIs, there are already some awesome iOS apps available that specialize in depth-based editing. One famous example is Darkroom with its “depth-aware filters”.
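As a rough illustration of the adjustment described above, the following sketch darkens the background and lightens the foreground of a tiny grayscale image by comparing each pixel's depth to a threshold. It assumes a depth map normalized to [0, 1] where smaller values are closer to the camera. The function name `depth_aware_exposure`, the threshold and the gain values are made up for this example and are not taken from Portrait or Darkroom.

```python
from typing import List

Grid = List[List[float]]  # rows of values in [0, 1]


def depth_aware_exposure(
    image: Grid,
    depth: Grid,
    threshold: float = 0.5,  # assumed split between foreground and background
    fg_gain: float = 1.2,    # hypothetical brightening factor
    bg_gain: float = 0.6,    # hypothetical darkening factor
) -> Grid:
    """Brighten pixels nearer than `threshold` and darken all others."""
    result = []
    for image_row, depth_row in zip(image, depth):
        out_row = []
        for value, d in zip(image_row, depth_row):
            gain = fg_gain if d < threshold else bg_gain
            # Clamp so the adjusted value stays a valid intensity.
            out_row.append(min(1.0, max(0.0, value * gain)))
        result.append(out_row)
    return result


# A 2x2 mid-gray image whose left column is close and right column is far.
image = [[0.5, 0.5], [0.5, 0.5]]
depth = [[0.1, 0.9], [0.2, 0.8]]
edited = depth_aware_exposure(image, depth)
```

A hard threshold keeps the example short; a production filter would more likely blend the two gains smoothly across the depth range to avoid visible seams.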

Depth of Field Effects

Since a depth of field or bokeh effect was Apple’s initial motivation for incorporating depth sensing technology, it is one of the most obvious applications. Depth is crucial for such an effect, as the amount of blurriness of any given region depends directly on its distance from the camera lens.
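To make the relation between depth and blur concrete, here is a minimal sketch that blurs each pixel with a box filter whose radius grows with the pixel's distance from a chosen focal depth. It assumes a grayscale image and a depth map normalized to [0, 1]. The names `blur_radius` and `depth_of_field` and the `max_radius` parameter are hypothetical, and a box blur is a stand-in for a real lens blur, not the effect Apple or our prototype implements.

```python
from typing import List

Grid = List[List[float]]


def blur_radius(depth_value: float, focal_depth: float, max_radius: int = 3) -> int:
    """Blur radius in pixels: zero on the focal plane, larger further away."""
    return round(abs(depth_value - focal_depth) * max_radius)


def depth_of_field(image: Grid, depth: Grid, focal_depth: float, max_radius: int = 3) -> Grid:
    """Apply a per-pixel box blur whose size depends on the depth map."""
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            r = blur_radius(depth[y][x], focal_depth, max_radius)
            # Average the (2r+1) x (2r+1) window, clipped at the image borders.
            window = [
                image[ny][nx]
                for ny in range(max(0, y - r), min(height, y + r + 1))
                for nx in range(max(0, x - r), min(width, x + r + 1))
            ]
            row.append(sum(window) / len(window))
        result.append(row)
    return result


# One row: two sharp foreground pixels, two distant background pixels.
image = [[0.0, 1.0, 0.0, 1.0]]
depth = [[0.0, 0.0, 1.0, 1.0]]
blurred = depth_of_field(image, depth, focal_depth=0.0)
```

Changing `focal_depth` moves the sharp region through the scene. Note that this naive version lets foreground colors bleed into the blurred background, which a more careful implementation would avoid.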

3D Asset Placement

As mentioned above, a depth map gives us a 3D understanding of the image. We’re able to tell if subject A is positioned in front of or behind subject B. This allows placement of digital assets like stickers or text in a ‘depth-aware’ fashion, but could also be used to apply ‘intelligent’ depth of field, e.g. a bokeh effect that ensures all faces are in focus.
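The ‘depth-aware’ placement described above can be sketched as a simple occlusion test: an asset is assigned a depth of its own and is only drawn over pixels that lie behind it. This is an illustrative sketch under the assumption of a normalized depth map where larger values are further away. `place_asset` and its parameters are hypothetical names, and `None` marks transparent asset pixels.

```python
from typing import List, Optional

Grid = List[List[float]]
Asset = List[List[Optional[float]]]  # None = transparent pixel


def place_asset(
    image: Grid, depth: Grid, asset: Asset, asset_depth: float, top: int, left: int
) -> Grid:
    """Draw `asset` at (top, left), hidden wherever the scene is closer than the asset."""
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]  # leave the input image untouched
    for ay, asset_row in enumerate(asset):
        for ax, asset_value in enumerate(asset_row):
            y, x = top + ay, left + ax
            if asset_value is None or not (0 <= y < height and 0 <= x < width):
                continue
            # Only paint where the scene is further away than the asset.
            if depth[y][x] > asset_depth:
                result[y][x] = asset_value
    return result


# The left pixel (depth 0.1) is in front of the asset and stays visible.
image = [[0.2, 0.2, 0.2]]
depth = [[0.1, 0.5, 0.9]]
sticker = [[1.0, 1.0, 1.0]]
composited = place_asset(image, depth, sticker, asset_depth=0.4, top=0, left=0)
```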

Enter Deep Learning

Motivated by the possibilities enabled by depth maps, we wondered whether we could bring this magic to any type of portrait image. We consulted existing literature on depth inference and found various papers¹ and articles on the topic, some of which even presented results that seemed sufficient for our use cases. In our case, we didn’t need metrically accurate results, as in ‘this pixel is 30cm in front of the camera’; we were only interested in getting the general distance relations correct. For us, knowing that one region was slightly behind another but definitely way in front of a third was enough to generate a visually pleasing effect, and by constraining our domain to portrait imagery, we were able to further reduce the task’s complexity.

Given our experience with deep learning and our current focus on introducing machine-learning-powered features to the PhotoEditor SDK, we immediately decided to tackle the new challenge with deep learning, or more specifically convolutional neural networks. Having a huge dataset of image and depth map pairs available made this choice even easier. We stuck to a system similar to our previous segmentation model but decided to put more emphasis on allowing the reuse of individual parts, as this would come in handy when adding features in the future. To achieve this, we created a new modularized neural network approach named Hydra, which will be presented in an upcoming blog post.
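One way to express ‘only the distance relations matter’ numerically is the scale-invariant error in log space used in the first paper listed in the footnote, “Depth Map Prediction from a Single Image using a Multi-Scale Deep Network”. The sketch below is our reading of that measure and is shown purely as an illustration. It is not a description of the loss our model was trained with, and the function name is hypothetical.

```python
import math
from typing import Sequence


def scale_invariant_error(
    predicted: Sequence[float], target: Sequence[float], lam: float = 1.0
) -> float:
    """Mean squared log difference minus lam times the squared mean log difference.

    With lam = 1.0, multiplying every predicted depth by the same constant
    leaves the error unchanged, so only relative depth is penalized.
    Depth values must be strictly positive.
    """
    diffs = [math.log(p) - math.log(t) for p, t in zip(predicted, target)]
    n = len(diffs)
    return sum(d * d for d in diffs) / n - lam * sum(diffs) ** 2 / n ** 2


target = [1.0, 2.0, 4.0]
doubled = [2.0, 4.0, 8.0]    # right ordering and ratios, wrong absolute scale
reversed_ = [4.0, 2.0, 1.0]  # ordering flipped

error_doubled = scale_invariant_error(doubled, target)     # close to zero
error_reversed = scale_invariant_error(reversed_, target)  # clearly positive
```

A prediction that is off by a global factor is treated as essentially perfect, while a prediction that gets the ordering of regions wrong is penalized, which matches the requirement described above.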

During development, we followed our tried and tested workflow of starting with a complex custom model, which is then tweaked and refined to match our performance requirements while maintaining the prediction quality we need. Once that was done, we had a fast and small model, trained on thousands of iPhone front camera selfies and capable of inferring high fidelity depth maps from a plain RGB image in under a second.

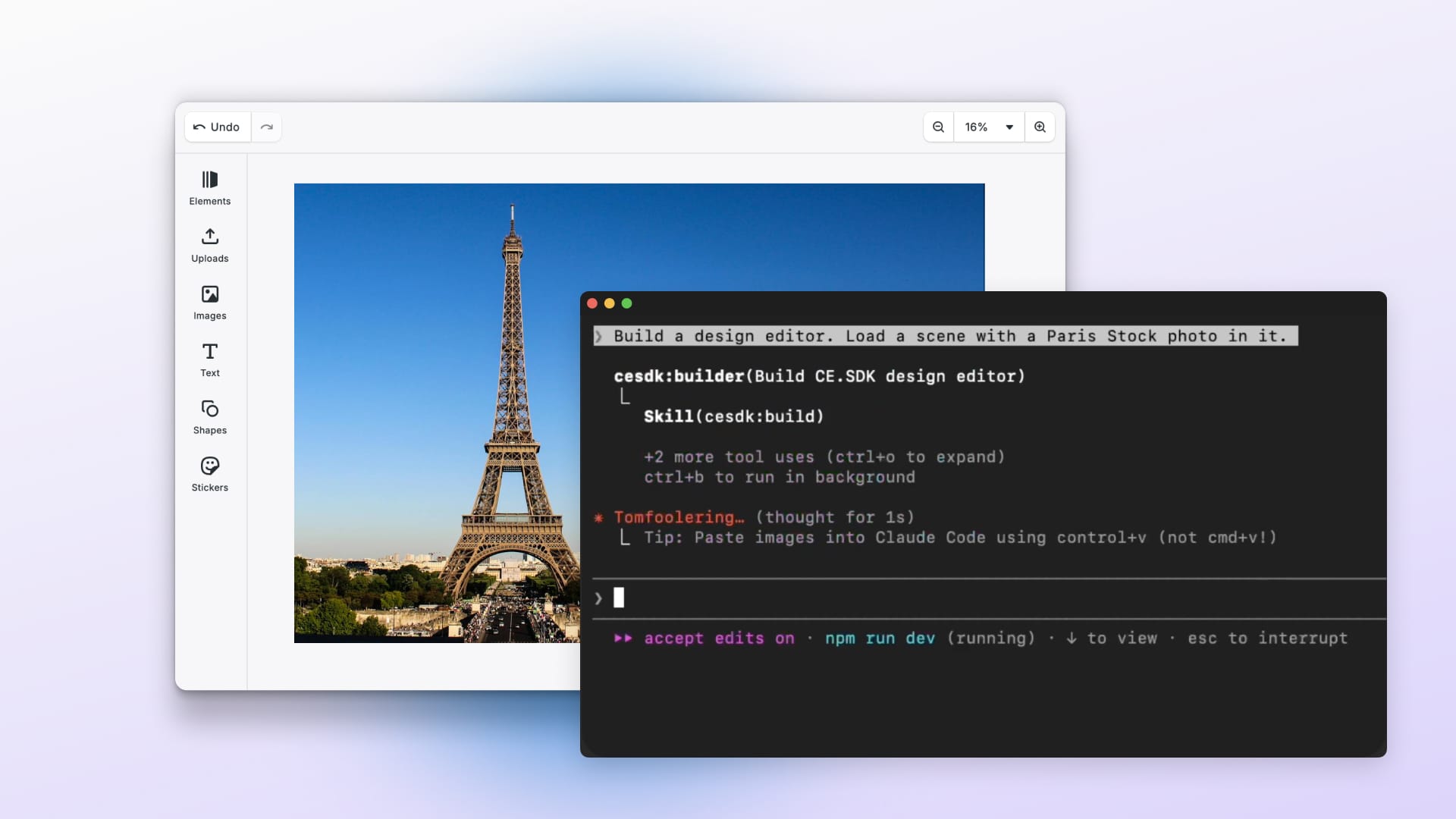

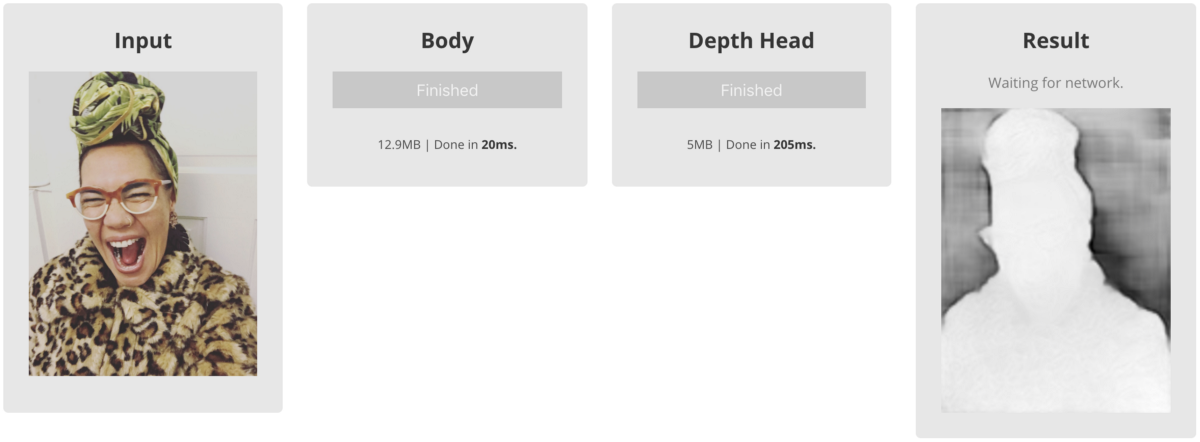

The Prototype

After creating a small model capable of inferring depth maps for any given portrait image, we immediately wanted to evaluate its performance in a ‘real-world’ environment. We decided to build a prototype that applies a depth of field effect to a portrait image, by using the model and its outputs. With our long-term goal of deploying the model to iOS, Android and the web in mind, we built the prototype using TensorFlowJS to explore this newly released library. Our browser demo consists of a minimal ‘Hydra’ implementation with individual modules, one for extracting features and one for the actual depth inference, which can both be executed individually.
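Hydra itself will be covered in a separate post, so the following is only a conceptual sketch of the idea mentioned here: a shared feature-extraction module whose output is computed once and then reused by independent task modules. The class `ModularPipeline`, its methods and the stand-in functions are all hypothetical. They are written in plain Python for brevity and do not reflect the actual Hydra or TensorFlowJS implementation.

```python
from typing import Callable, Dict, List

Features = List[float]
Head = Callable[[Features], list]


class ModularPipeline:
    """Runs one shared feature extractor and any number of task heads."""

    def __init__(self, extract_features: Callable[[List[int]], Features]):
        self._extract = extract_features
        self._heads: Dict[str, Head] = {}
        self._cache: Dict[str, Features] = {}
        self.extractor_calls = 0  # exposed to show how often the extractor ran

    def add_head(self, name: str, head: Head) -> None:
        self._heads[name] = head

    def features(self, image_id: str, pixels: List[int]) -> Features:
        # The expensive module runs once per image; later calls reuse the result.
        if image_id not in self._cache:
            self._cache[image_id] = self._extract(pixels)
            self.extractor_calls += 1
        return self._cache[image_id]

    def run(self, head_name: str, image_id: str, pixels: List[int]) -> list:
        return self._heads[head_name](self.features(image_id, pixels))


# Stand-ins for real networks: normalize pixels, then derive two outputs.
pipeline = ModularPipeline(lambda pixels: [p / 255 for p in pixels])
pipeline.add_head("depth", lambda feats: [1.0 - f for f in feats])
pipeline.add_head("mask", lambda feats: [f > 0.5 for f in feats])

pixels = [0, 255, 51]
depth_out = pipeline.run("depth", "selfie-1", pixels)
mask_out = pipeline.run("mask", "selfie-1", pixels)  # reuses cached features
```

In this sketch, adding a further feature means registering one more head rather than shipping and running a second full model, which is the kind of reuse the modular approach is meant to enable.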

While being optimized for performance and memory footprint, the trained weights of the model still add up to ~18MB, which we will improve by further fine-tuning or even applying pruning or quantization. Once the models are loaded, all further processing happens on the device though, so you may try out all the samples without worrying about your data plan.
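To give a feeling for what quantization could buy, the sketch below maps floating-point weights to 8-bit codes with a single linear scale and estimates the resulting download size. It assumes the ~18MB of weights are stored as 32-bit floats, which this article does not state, and it is a generic textbook scheme rather than the method we will end up using. All function names are hypothetical.

```python
from typing import List, Tuple


def quantize_uint8(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map weights linearly onto integer codes 0..255; returns (codes, scale, offset)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    codes = [min(255, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def dequantize(codes: List[int], scale: float, offset: float) -> List[float]:
    """Recover approximate weights from their 8-bit codes."""
    return [offset + c * scale for c in codes]


def estimated_size_mb(size_mb: float, from_bits: int = 32, to_bits: int = 8) -> float:
    """Size after changing bits per weight, ignoring the few bytes of metadata."""
    return size_mb * to_bits / from_bits


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, scale, offset = quantize_uint8(weights)
restored = dequantize(codes, scale, offset)
# The round-trip error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

smaller = estimated_size_mb(18.0)  # 18 MB of float32 weights stored as 8-bit codes
```

Under the float32 assumption, 8-bit codes would cut the download to roughly a quarter of its size, at the cost of a small rounding error per weight whose effect on depth quality would have to be measured.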

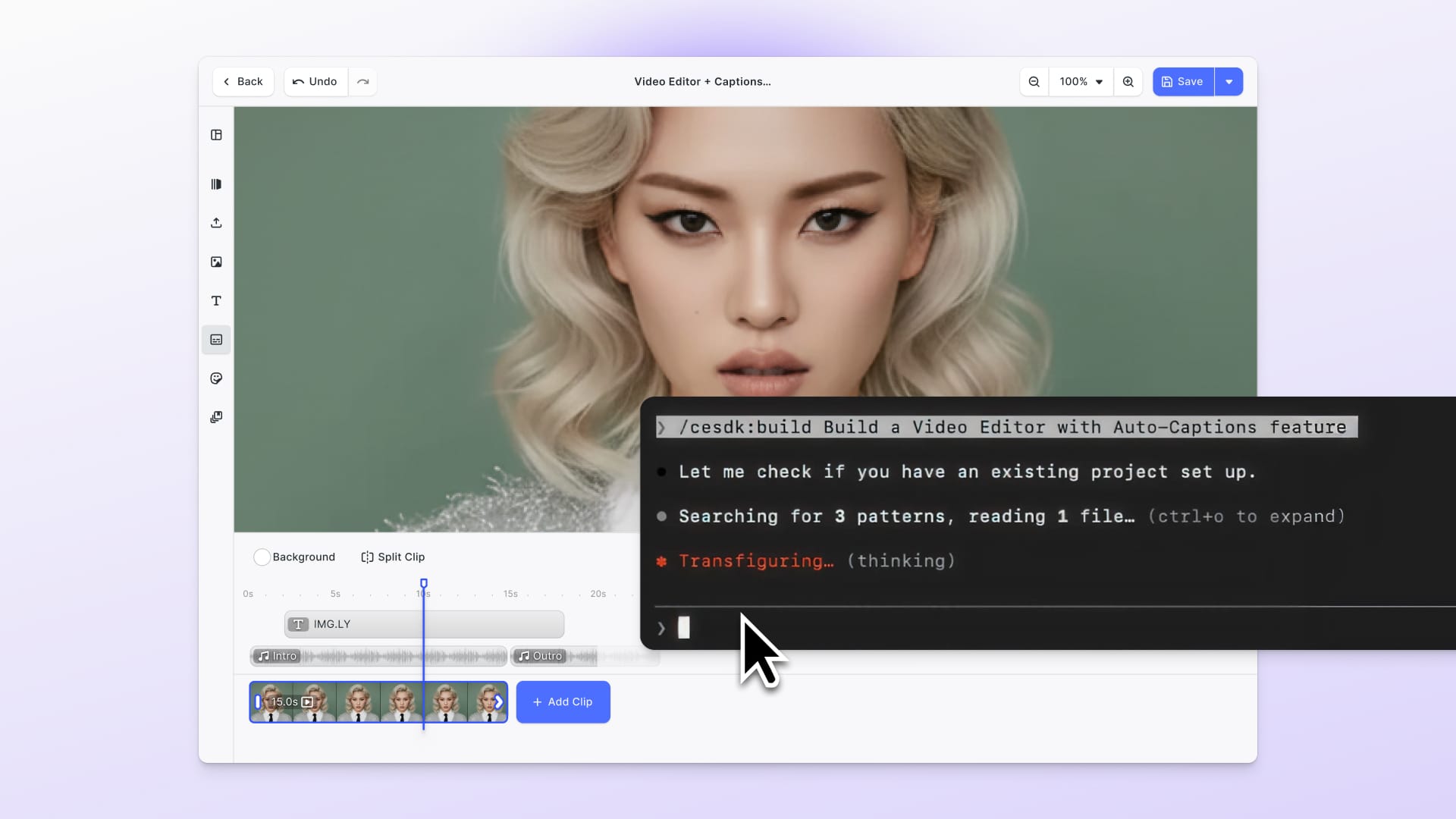

Results

Seeing our vision come to life was quite a stunning experience. Suddenly our browser was able to perform a complex depth of field effect without the need for special hardware, manual annotations or anything else apart from our image. And the best part was manually moving the focal plane through the image, either by sliding or tapping on different regions. Although trained on ‘just’ selfies, the model handles turned heads, silhouettes and multiple people pretty well and isn’t as restricted to its domain as we initially expected.

And while our initial prototype still weighs in at ~18MB, we’re certain we can slim that down further in order to use the model in production. Performance-wise, we were very impressed with the TensorFlowJS inference speed. Even though everything happens on the client side and is therefore dependent on the client’s hardware, we saw inference times below one second right off the bat, and these improved greatly after the initial run, as the resources were already allocated. While not immediately helpful for the depth inference part, this allowed us to further confirm our theory behind Hydra: re-running inference once the necessary resources on the machine have been allocated greatly increases performance and might even allow real-time performance after an initial setup time.

To summarise, we’re definitely eager to further explore the use of depth data in image editing and think we have found a way to overcome the access restrictions on different platforms and hardware with our custom model. Combined with our new Hydra approach, we can see lots of potential features that will delight both our users and customers, and we will keep you updated right here.

(1)

The papers we drew on most for our use case were:

“Depth Map Prediction from a Single Image using a Multi-Scale Deep Network” (arXiv)

“Deeper Depth Prediction with Fully Convolutional Residual Networks” (arXiv)
