This isn't an article about AI replacing engineers; it's about discovering what (software) engineering will become when freed from the mechanics of coding itself.

Vibe-coding and vibe-engineering are omnipresent in my social feeds these days. As with every new trend, it's hard to judge what really works and what is pure marketing hype. But one central question nagged at me: if AI really can do all the coding, what exactly are we humans supposed to do?

So I wanted to find out for myself. I didn't want to build just another Hello World example, so I pulled an old idea out of the closet and dusted it off.

The Experiment: AI Agents Code, Humans Curate

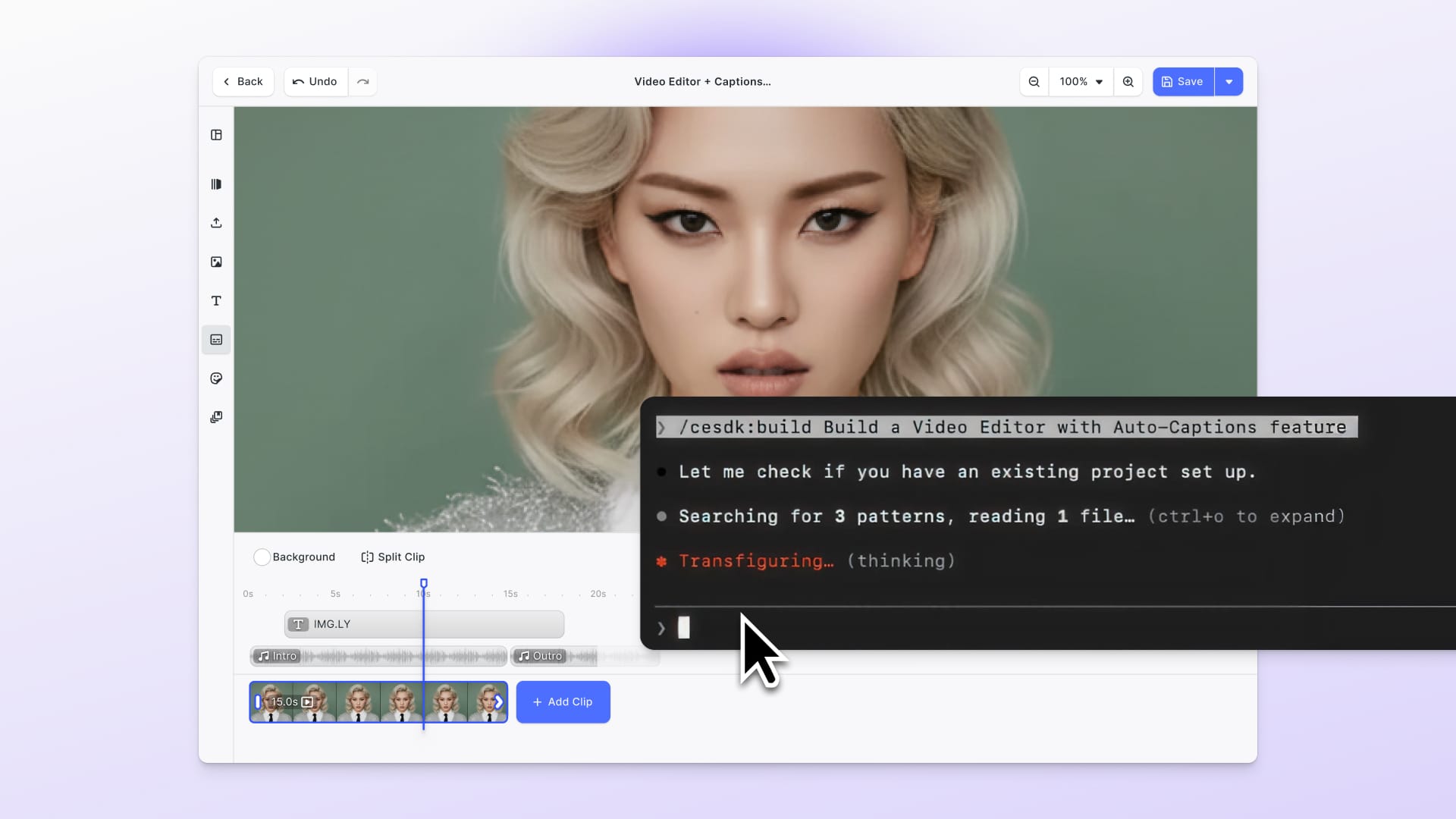

For some time, I had considered porting our IMG.LY background removal library from JavaScript to other platforms using Rust. However, I had postponed this side project due to the anticipated effort involved. This seemed like the perfect project to discover what my role would be in a world where AI does the heavy lifting. To pull it off, I self-imposed only one strict rule:

Hands off the code!

Every commit, every fix, every feature would be handled by an AI coding agent. My role? Curator: formulating my intent, reviewing, play-testing, and guiding the agent with feedback.

The agent should be the sole coder, from start to finish.

This was a true hands-off experiment: could an AI build an entire Rust library if I never touched the code?

Is this really something new? After years of handing off development work to teammates as a CTO, and of teaching students, it didn't feel completely different.

So, what happens when you let an AI do all the coding, and you just review and give feedback? What does it actually mean to be a human in this new paradigm?

The result is baffling and offers a glimpse of what we engineers can expect in the future.

10,000+ lines of production-ready code. Authored by AI, orchestrated by a human.

Seeing is believing? Then explore the results and judge for yourself at github.com/imgly/background-removal-rs.

I open-sourced the full code and the agent rules used during this experiment.

Here's what I learned from vibe-engineering an entire Rust library with a coding agent CLI as my only pair programmer.

A quick note: I chose Claude Code with Opus 4 and Sonnet 4, as I was already familiar with them and had achieved the best results with them so far. I kept costs in check with a Claude Max account, which at the time of writing cost around €100 per month.

The General Workflow That Emerged

After an initial period of getting used to working with an AI agent, I realized that the workflow closely follows a typical software engineering flow, but with an important twist that answers our central question: what do humans actually do when AI codes? While most people focus on the code-writing part, I discovered that quality assurance becomes the human's primary domain. In my experiments, this QA phase proved essential for keeping the code intact over time, and this is where humans truly shine. Most interestingly, with the right rules and prompting, we can let the agent do the heavy lifting here, too.

Here's how the collaboration actually worked in practice:

Getting Started

The project began with me setting up the environment and configuring timeouts for long-running tasks, because AI agents need time to compile Rust code and run extensive test suites. Together, the AI and I created a CLAUDE.md file with coding standards and rules (you can see the actual rules we used in the repository). We also wrote project requirements documents collaboratively, with me providing the vision and the AI helping structure the technical details.

The Development Dance

Once we got rolling, a natural rhythm emerged. I'd describe what I wanted—something like "add support for different image formats with color profile preservation." The AI would then create a detailed implementation plan, breaking down the work into manageable chunks. I'd review this plan, often tweaking the approach or scope, then give the green light.

What happened next was fascinating: the AI would write the code, run correctness checks, update all the tests (unit tests, documentation tests, end-to-end tests), run the linter, analyze coverage, and even update documentation and examples. Sometimes it would run performance benchmarks without me asking—the overzealous builder at work.

My New Role: The Quality Guardian

My job became verifying that the intent was met and the API actually made sense. I'd play-test the functionality, try to break it in ways a real user might, and provide feedback. When something worked well, I'd document what we learned for future development. The AI would then write tests to lock in that new functionality, ensuring we never accidentally broke what was working.

This cycle repeated for every feature, with the AI handling the heavy lifting while I focused on direction, quality, and user experience.

The interesting part was that the more I got used to the workflow, the more I handed off to the agent. Validation and the overall experience still had to be largely shaped or orchestrated by me, but building, testing, and even updating documentation could be delegated. In the beginning, I just focused on building features, but the more we built, the more often the agent failed to keep existing functionality intact. Given its limited context window, that's understandable after all.

Quality Assurance & Knowledge Capture Matter More Than Ever

As engineers, we know that at some point we can no longer grasp every effect our code changes have on the whole codebase. That's why we rely on tests and tooling to keep our sanity.

The good news is that the Rust and Cargo toolchains provide exceptionally high-quality tooling, and that tooling serves agents just as well as humans.

Because the agent lacks long-term context and has only limited knowledge of all the library's capabilities, tests and verification mechanisms become crucial. Although they are part of the context, they're not stored in the agent's memory but baked into the codebase itself. With tests and tooling available, the agent will still make mistakes, as I do, but it can effectively self-correct based on the feedback they provide.

I can only advise being even stricter with QA from the very beginning.

Another thing that became apparent very quickly is that, unlike a human colleague with lots of context and a good memory, agents still need significant help remembering things so they don't rediscover the same information over and over. This revealed another crucial human role: context engineering. We engineers become the keepers of institutional knowledge, the ones who help the agent remember what worked and what didn't. For lack of a better term, I would call it knowledge capture.

Tips & Tricks

AI Is Eager, Resourceful, and Has Memory Like a Sieve

Here are the key insights I gained from building the library with an AI coding agent:

Code that works: This might seem obvious, but it wasn't clear to me whether the library would ever be usable or publishable. It is. The AI proved it could deliver production-ready code.

Time Effectiveness: Creating the library took around three weeks, but don't let this fool you. Most of the time, the agent worked alone, and I only had to check in once in a while to review and give feedback. Iteration cycles and rewrites still take time, so I wouldn't say development was faster than if I had done it myself, but it was much more scalable and a far more effective use of my own time. Here's what I actually spent my time on: reviewing architectural decisions, ensuring the API made sense, and play-testing the final product.

Stamina: The agent never complained, even when fixing 400+ warnings after I set the linter to pedantic. It powered through hundreds of warnings, even clustering them and suggesting which to fix first. To be fair, most humans would have either gone crazy or abandoned the effort, claiming "it's good enough." This revealed something important: as humans, we no longer need to be the ones grinding through tedious tasks.
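For reference, Clippy's pedantic group can be enabled with `cargo clippy -- -W clippy::pedantic` or `#![warn(clippy::pedantic)]` at the crate root. The sketch below is my own illustration, not what the agent actually ran: it groups warnings by lint name so the largest clusters can be tackled, or consciously allowed, first. It assumes the input lines come from `cargo clippy --message-format=json`, where a compiler message carries its lint name as `"code":{"code":"..."}`, and it uses a naive substring search instead of a proper JSON parser such as serde_json. `lint_code` and `cluster_lints` are hypothetical names.

```rust
use std::collections::HashMap;

/// Extracts the lint name from one line of `cargo clippy --message-format=json` output.
/// Naive on purpose (assumption): it searches compact JSON for `"code":{"code":"`
/// instead of parsing the message properly.
pub fn lint_code(json_line: &str) -> Option<&str> {
    const KEY: &str = "\"code\":{\"code\":\"";
    let start = json_line.find(KEY)? + KEY.len();
    let len = json_line[start..].find('"')?;
    Some(&json_line[start..start + len])
}

/// Groups lint names by frequency, most frequent first; ties are ordered by name.
pub fn cluster_lints<'a>(codes: impl IntoIterator<Item = &'a str>) -> Vec<(&'a str, usize)> {
    let mut counts: HashMap<&'a str, usize> = HashMap::new();
    for code in codes {
        *counts.entry(code).or_insert(0) += 1;
    }
    let mut clusters: Vec<(&'a str, usize)> = counts.into_iter().collect();
    // HashMap order is random, so sort fully: count descending, then name ascending.
    clusters.sort_by(|a, b| b.1.cmp(&a.1).then_with(|| a.0.cmp(b.0)));
    clusters
}
```

Fed with the JSON lines of a pedantic run, `cluster_lints(lines.filter_map(lint_code))` yields a short ranked list instead of hundreds of individual warnings.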

Overzealous Builder: The agent often built more than I asked for. Scoping and clear implementation plans were essential. This taught me that one of the key human roles is setting boundaries and maintaining focus.

Premature "Done": Sometimes the agent claimed it was finished but left code mocked or incomplete. I learned to always ask, "Is there anything left to implement?" Quality assurance becomes fundamentally a human responsibility.
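The question "Is there anything left to implement?" can be backed by a mechanical check. This is a hypothetical sketch, not part of the library or of Claude Code: it scans source text for common stub markers and reports their line numbers, so leftovers surface before a feature is declared done. The marker list (`todo!()`, `unimplemented!()`, `TODO`, `FIXME`) is my assumption.

```rust
/// Markers that usually indicate unfinished work (assumption; extend as needed).
const STUB_MARKERS: &[&str] = &["todo!(", "unimplemented!(", "TODO", "FIXME"];

/// Returns (1-based line number, trimmed line) for every line containing a stub marker.
pub fn find_stubs(source: &str) -> Vec<(usize, String)> {
    source
        .lines()
        .enumerate()
        .filter(|(_, line)| STUB_MARKERS.iter().any(|marker| line.contains(*marker)))
        .map(|(index, line)| (index + 1, line.trim().to_string()))
        .collect()
}
```

It will also flag legitimate TODO comments, so hits are best treated as prompts for review rather than hard errors.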

Old Knowledge: The AI sometimes relied on outdated knowledge of libraries. I had to remind it to check the latest docs and dependency versions, but when asked, it searched the web, GitHub, crates.io, etc., and gathered all the information needed to use a library. Humans become the validators of information freshness.

Forgetting and Compaction: Larger features outgrow the context window, so the agent would "compact" its context, sometimes forgetting important details. Forcing it to write implementation plans into markdown files helps maintain flow and makes resuming and bookkeeping easier. We humans become the keepers of long-term project memory.

Helps Establish Rules: While working with the agent, I saw repeating patterns, such as it forgetting how to check, build, and test the application, or forgetting best practices like using git worktrees. At first, I added new rules to CLAUDE.md myself, but I quickly realized that the agent is far better at formulating and adding new rules when I ask it to. The human role evolved into being the pattern recognizer and rule creator.

Doesn't Always Obey the Rules: Most of the time, the rules are followed, but occasionally the agent ignores them, for example by forgetting to run all tests automatically. This reinforced that humans must remain the final guardians of process and quality.

Code Deletion: Occasionally, the AI "fixed" issues by removing important code paths. Solution: Insist on never removing functionality without discussion, and build a robust test suite. We become the protectors of existing functionality.
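A minimal sketch of such a guard, assuming a hypothetical `OutputFormat` type: it mirrors the output formats listed under Library Capabilities but is not the library's actual API, and the `rgba` extension is made up for illustration. The table-driven regression test fails as soon as the code path for a shipped format disappears, which makes a "fix by deletion" visible immediately. BMP appears only as an input format in the feature list, so it deliberately maps to `None`.

```rust
/// Hypothetical output formats, modelled on the feature list (PNG, JPEG, WebP, TIFF, raw RGBA8).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum OutputFormat {
    Png,
    Jpeg,
    WebP,
    Tiff,
    Rgba8,
}

impl OutputFormat {
    /// Maps a file extension (case-insensitive) to an output format.
    pub fn from_extension(ext: &str) -> Option<Self> {
        match ext.to_ascii_lowercase().as_str() {
            "png" => Some(Self::Png),
            "jpg" | "jpeg" => Some(Self::Jpeg),
            "webp" => Some(Self::WebP),
            "tif" | "tiff" => Some(Self::Tiff),
            "rgba" => Some(Self::Rgba8), // hypothetical extension for raw RGBA8 output
            _ => None,
        }
    }
}

#[cfg(test)]
mod tests {
    use super::OutputFormat;

    /// Regression guard: every extension that has ever shipped must keep resolving.
    /// Deleting a match arm to "fix" something makes this test fail loudly.
    #[test]
    fn shipped_output_formats_stay_supported() {
        let shipped = [
            ("png", OutputFormat::Png),
            ("jpg", OutputFormat::Jpeg),
            ("jpeg", OutputFormat::Jpeg),
            ("webp", OutputFormat::WebP),
            ("tiff", OutputFormat::Tiff),
            ("rgba", OutputFormat::Rgba8),
        ];
        for &(ext, expected) in shipped.iter() {
            assert_eq!(OutputFormat::from_extension(ext), Some(expected), "lost support for .{}", ext);
        }
    }
}
```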

Process Management: The AI struggled with background processes: understanding when to run things in the background and keeping track of what it had started. For example, starting a web server via Bash (see the Claude Code Bash tool docs) and then trying to access that server with a Playwright tool (see also playwright-mcp) was blocked by these issues. Complex orchestration remains a distinctly human skill.
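The bookkeeping part can be illustrated with a small pattern. This is a sketch assuming a Unix-like system, not how Claude Code works internally, and `ChildGuard` is a hypothetical name: every background process is wrapped in a guard that remembers the child and kills it when the guard is dropped, so nothing started for a test run (such as a local web server) outlives it.

```rust
use std::io;
use std::process::{Child, Command, ExitStatus};

/// Owns a background process and kills it when dropped.
pub struct ChildGuard {
    child: Option<Child>,
}

impl ChildGuard {
    /// Spawns the command in the background and keeps track of the child process.
    pub fn spawn(command: &mut Command) -> io::Result<Self> {
        Ok(Self { child: Some(command.spawn()?) })
    }

    /// Process id, useful for logging what was started.
    pub fn id(&self) -> Option<u32> {
        self.child.as_ref().map(Child::id)
    }

    /// Checks without blocking whether the process is still alive.
    pub fn is_running(&mut self) -> io::Result<bool> {
        match self.child.as_mut() {
            Some(child) => Ok(child.try_wait()?.is_none()),
            None => Ok(false),
        }
    }

    /// Kills the process (if still running) and reaps it, returning its exit status.
    pub fn stop(&mut self) -> io::Result<Option<ExitStatus>> {
        match self.child.take() {
            Some(mut child) => {
                // Best effort: the process may already have exited on its own.
                let _ = child.kill();
                child.wait().map(Some)
            }
            None => Ok(None),
        }
    }
}

impl Drop for ChildGuard {
    fn drop(&mut self) {
        // Errors cannot be propagated out of Drop, so cleanup failures are ignored.
        let _ = self.stop();
    }
}
```

A harness could start the web server through such a guard, run the Playwright checks, and rely on `Drop` for cleanup even if a check panics.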

Overly Agreeable: Too often, it just agreed with whatever I said. In the future, I wish agents would be less obedient. This highlighted that humans need to be the challengers and devil's advocates in the process.

Further Remarks

After three weeks of this experiment, the patterns became clear. Here's what I learned about the human role in AI-assisted development:

Ensure, Verify, and Self-Correct: Always ask the AI to check, format, lint, test, and benchmark its work. Your job becomes quality orchestration, not quality execution.

Always Plan First: Insist on a clear implementation plan before coding begins. Humans excel at high-level architectural thinking and breaking down complex problems.

Write It Down: Let the agent keep notes, todos, and open issues in markdown files, ideally in the repository itself. Don't rely on the AI's memory. We become the institutional memory keepers.

Scope Features: Break tasks into small, manageable pieces. Feature scoping and boundary setting become a core human skill.

Test Suite is King: A robust test suite prevents accidental "fixes" that remove functionality. Humans become the guardians of existing behavior.

Stay Involved: Fast iteration and discussion with the AI are crucial. Don't go fully hands-off. Active curation and guidance remain essential.

Conclusion

So, what do humans actually do when AI does all the coding?

To answer that, let's look at what AI agents are today:

Agents are super-talented, overly obedient coding assistants with lots of stamina and endless potential.

They're fast, never complain, and iterate like champs. But they need your guidance, structure, and a healthy dose of skepticism.

In the end, the best results come from a true partnership: you plan and guide—the AI builds, fixes, and learns.

If you follow these concepts, then

"Vibe engineering is a highly engaging experience."

And if you're wondering: yes, I'd do it again. But next time, I'll make sure the AI writes everything down too, and I'll put even more effort into validating and securing new features as soon as they're play-tested and verified. The human role isn't disappearing—it's evolving into something more strategic and impactful.

Through this experiment, I've identified three fundamental shifts that define what engineering becomes in an AI-assisted world:

From Execution to Orchestration

We're no longer the ones typing code; we're the conductors. Our new skills revolve around prompting (framing tasks so AI understands our intent), directing workflows (knowing when to use AI versus human judgment), and tool fluency (combining different AI tools to create something greater than the sum of their parts).

From Knowledge to Reasoning

AI can recall facts and syntax better than any human ever could. But what matters now is our ability to interpret, contextualize, and make sense of uncertainty—something current AI still struggles with. We become the ones who ask "why" and "what if" rather than just "how."

From Repetition to Adaptation

Skills rooted in routine are increasingly automated. The enduring value lies in adaptive thinking, problem-framing, and reinventing approaches when the usual patterns don't apply. We're the ones who recognize when something feels off, even if we can't immediately articulate why.

Afterthought

During this experiment, I saw the agent repeat similar tasks over and over again. One that struck me was looking up documentation. Whenever something with a third-party library didn't pan out, it started searching the web for information and guides about the library. It rarely started by reading the Rust docs, so I had to tell it to use them. I therefore assume that providing quick access to guides, docs, API references, and examples via tools would spare me some round trips, time, and tokens, and accelerate the process. I know there are tools like context7, but they seem to be focused on JavaScript. In the Rust ecosystem, at least, docs are centralized and standardized and can even be used locally. A tool to access them quickly and search them would be highly beneficial.
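As a rough sketch of such a tool (the function names are hypothetical, and it assumes rustdoc's default output layout): `cargo doc` writes each crate's documentation to `target/doc/<crate>/index.html`, and item pages follow the pattern `<kind>.<Name>.html`, for example `struct.DynamicImage.html`. Listing the documented crates and searching item pages by name needs only the standard library. For the standard library itself, `rustup doc --std` opens the locally installed docs.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Lists crates with generated docs, i.e. subdirectories of `target/doc` containing `index.html`.
pub fn documented_crates(doc_root: &Path) -> io::Result<Vec<String>> {
    let mut crates = Vec::new();
    for entry in fs::read_dir(doc_root)? {
        let path = entry?.path();
        if path.join("index.html").is_file() {
            if let Some(name) = path.file_name().and_then(|n| n.to_str()) {
                crates.push(name.to_owned());
            }
        }
    }
    crates.sort();
    Ok(crates)
}

/// Recursively finds item pages whose item name contains `query` (case-insensitive).
pub fn find_item_pages(crate_dir: &Path, query: &str) -> io::Result<Vec<PathBuf>> {
    let query = query.to_lowercase();
    let mut hits = Vec::new();
    let mut pending = vec![crate_dir.to_path_buf()];
    while let Some(dir) = pending.pop() {
        for entry in fs::read_dir(&dir)? {
            let path = entry?.path();
            if path.is_dir() {
                pending.push(path);
                continue;
            }
            let file_name = path.file_name().and_then(|n| n.to_str()).unwrap_or("");
            // `<kind>.<Name>.html`: drop the kind prefix and the extension.
            // `index.html` yields no item because "html" has no ".html" suffix.
            let item = file_name
                .split_once('.')
                .and_then(|(_kind, rest)| rest.strip_suffix(".html"));
            if let Some(item) = item {
                if item.to_lowercase().contains(&query) {
                    hits.push(path);
                }
            }
        }
    }
    hits.sort();
    Ok(hits)
}
```

Exposed to the agent as a tool, `find_item_pages(Path::new("target/doc/image"), "resize")` would point it straight at the local API page instead of a web search.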

Additionally, coding projects have typical steps for verifying that the code is sound. It's probably best to provide specific tools or workflows that follow these steps exactly, although rules already help with this.
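Such a workflow could be as simple as a fixed list of commands that must all pass. The sketch below is my illustration, not a tool from the repository: it runs the usual format, lint, and test steps in order and stops at the first failure. The step list is an assumption modelled on common Rust practice; note that `cargo test --all-targets` skips doc tests, hence the separate `cargo test --doc` step. The runner is injected so the ordering logic can be tested without invoking Cargo.

```rust
use std::process::Command;

/// One verification step: a label plus the command to run.
pub struct Step {
    pub label: &'static str,
    pub program: &'static str,
    pub args: &'static [&'static str],
}

/// Assumed default steps; adjust to the project's own rules.
pub const STEPS: &[Step] = &[
    Step { label: "format", program: "cargo", args: &["fmt", "--all", "--", "--check"] },
    Step { label: "lint", program: "cargo", args: &["clippy", "--all-targets", "--", "-D", "warnings"] },
    Step { label: "test", program: "cargo", args: &["test", "--all-targets"] },
    Step { label: "doc tests", program: "cargo", args: &["test", "--doc"] },
];

/// Runs the steps in order and returns the label of the first one that fails.
pub fn run_pipeline<F>(steps: &[Step], mut run: F) -> Result<(), &'static str>
where
    F: FnMut(&Step) -> bool,
{
    for step in steps {
        if !run(step) {
            return Err(step.label);
        }
    }
    Ok(())
}

/// Real runner: spawns the command and reports whether it exited successfully.
pub fn run_command(step: &Step) -> bool {
    Command::new(step.program)
        .args(step.args)
        .status()
        .map(|status| status.success())
        .unwrap_or(false)
}
```

Wired into a small binary, `run_pipeline(STEPS, run_command)` would give the agent a single command whose result says whether a change is sound, and which step to look at if it isn't.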

Last but not least, a natural next step would be for an agent to periodically analyze its history and propose new memory entries (rules) based on it to reduce repetition and common mistakes. For more details, see the Appendix: Most Used Prompts section, where I analyzed the ten most common prompt categories to help agents proactively develop useful rules.

If you're curious about improvements, I've put together a wishlist for Claude Code that would make the agent even more useful.

Try It Yourself

If you want to experience the results of vibe-engineering firsthand:

```shell
# Clone and explore the codebase
git clone https://github.com/imgly/background-removal-rs.git
cd background-removal-rs
git checkout v0.2.0

# Or install the CLI directly
cargo install --git https://github.com/imgly/background-removal-rs.git --tag v0.2.0
```

Remember: every line of code in this library was written by AI while I played the role of curator, architect, and quality guardian. Judge for yourself whether this new paradigm produces production-ready results.

Appendix

Appendix: Claude Code Wishlist

During the experiment, I encountered some issues whose resolution would improve the agent.

- Project-scoped history (not global).

- Project-scoped todos (not global).

- Project-scoped implementation plans (not global).

- Customizable `/compact` prompt, or a choice of other compaction strategies.

- Improved handling of background processes.

- History analytics with memory proposal.

- Code-specific predefined `Code` and `Validate` workflows.

- Allow forking of multiple agents from a single point in the session history to create multiple trials with the same intent and context.

Appendix: Most Used Prompts

Claude Code stores its history under ~/.claude/history in JSON format. I asked Claude Code to read these files and categorize the most frequently used prompts.

| # | Category | % Usage | Description / Examples |
|---|---|---|---|
| 1 | Other/Specific Instructions | 19.5% | Technical specs, detailed requirements e.g.: "Relax the success metrics", "Use tensor data directly as alpha channel" |
| 2 | Questions | 12.7% | Status checks, clarifications e.g.: "What's next?", "How can I test it myself?", "Do we have any mock implementations?" |
| 3 | Implementation Requests | 11.1% | Direct build/create requests e.g.: "Create a PRD for Rust port", "Make ONNX Runtime injectable" |
| 4 | Simple Responses | 10.0% | Short confirmations, approvals e.g.: "ok", "go for it", specific technical choices |
| 5 | Continuation Commands | 8.4% | Requests to proceed e.g.: "go on" (59 times) |
| 6 | File Operations | 8.3% | File management e.g.: "Write this into PRD.md", "Move packages into crates directory" |
| 7 | Context Summaries | 8.3% | Session continuation due to context limits e.g.: "This session is being continued from a previous conversation..." |
| 8 | Analysis/Review Requests | 7.7% | Requests to analyze/review e.g.: "Analyze my background removal project", "Check the preprocessing" |
| 9 | Bug Fix/Issue Resolution | 4.4% | Problem identification/fixes e.g.: "You mix up release and debug", "This is wrong" |
| 10 | Testing | 3.8% | Running/validating tests e.g.: "Test images are incorrect", "Rerun comparison tests" |

Appendix: Claude Code Settings

In real-world projects, the default Bash timeout of two minutes is not enough. I raised the timeouts to one hour (3,600,000 ms) to allow execution of unit tests, E2E tests, benchmarks, and long-running tasks. The values go into the `env` section of Claude Code's `settings.json`.

- BASH_DEFAULT_TIMEOUT_MS: Sets the default timeout (in milliseconds) for long-running Bash commands.

- BASH_MAX_TIMEOUT_MS: Specifies the maximum timeout (in milliseconds) that can be set for Bash commands.

- BASH_MAX_OUTPUT_LENGTH: Limits the maximum number of characters in Bash output before it is truncated in the middle.

- CLAUDE_BASH_MAINTAIN_PROJECT_WORKING_DIR: Ensures the working directory is reset to the original one after each Bash command.

- MCP_TIMEOUT: Timeout (in milliseconds) for MCP server startup.

- MCP_TOOL_TIMEOUT: Timeout (in milliseconds) for MCP tool execution.

```json
{
  "env": {
    "CLAUDE_BASH_MAINTAIN_PROJECT_WORKING_DIR": "true",
    "BASH_MAX_TIMEOUT_MS": "3600000",
    "BASH_DEFAULT_TIMEOUT_MS": "3600000",
    "MCP_TIMEOUT": "3600000",
    "MCP_TOOL_TIMEOUT": "3600000"
  }
}
```

Library Capabilities

High-performance Rust library for AI-powered background removal with hardware acceleration, built for production scale and developer productivity.

Performance Highlights

- Hardware acceleration: CUDA (NVIDIA), CoreML (Apple Silicon), CPU fallback

- Sub-second processing on modern hardware (100-1200ms depending on image size)

- Memory efficient with optimized threading and session reuse

Key Features

AI Models & Quality

- Multiple state-of-the-art models (ISNet, BiRefNet)

- FP16/FP32 precision variants for performance vs. quality

- Portrait-optimized and general-purpose models

- Custom model support via ONNX models from Hugging Face

Format and Color Profile Support

- Input: JPEG, PNG, WebP, TIFF, BMP with ICC color profile preservation

- Output: PNG (transparency), JPEG, WebP, TIFF, raw RGBA8

Integration Patterns

- One-liner API for simple use cases

- Session-based processing for batch operations

- Stream processing from any AsyncRead source

- CLI tool for standalone usage and pipeline integration

Architecture & Platforms

Dual Backend System

- ONNX Runtime: Maximum performance, GPU acceleration

- Tract: Pure Rust, zero external dependencies

Platform Support

- macOS: Apple Silicon + Intel with CoreML acceleration

- Linux/Windows: NVIDIA CUDA + CPU fallback

Developer Experience

Modern Rust Ecosystem

- Async/await native support

- Comprehensive documentation and examples

- Zero-warning policy with extensive testing

- Structured tracing for production observability

CLI Capabilities

- Batch processing with recursive directory support

- Model management (download, cache, clear)

- Provider diagnostics and performance monitoring

- Pipeline-friendly with stdin/stdout support
